In this tutorial, we look at the top practical Machine Learning interview questions and answers in brief.

These are interview questions and answers on machine learning topics, written in a conversational, easy-to-understand manner.

Let's start with the top 33 Machine Learning interview questions and answers, a short way to prepare for a Machine Learning or Data Science interview.

1. How can you define Machine Learning?

Answer: Machine Learning (ML) is a branch of artificial intelligence that focuses on building systems that can learn from and make decisions based on data.

Instead of being explicitly programmed to perform a task, an ML model improves its performance by learning from patterns and insights in data.

2. What do you understand by a labeled training dataset?

Answer: A labeled training dataset is a collection of data where each example has input features and an associated correct output label.

For instance, in a dataset used to train a spam filter, emails (inputs) are labeled as “spam” or “not spam” (outputs).

3. What are the two most common supervised ML tasks you have performed so far?

Answer: The two most common supervised ML tasks are:

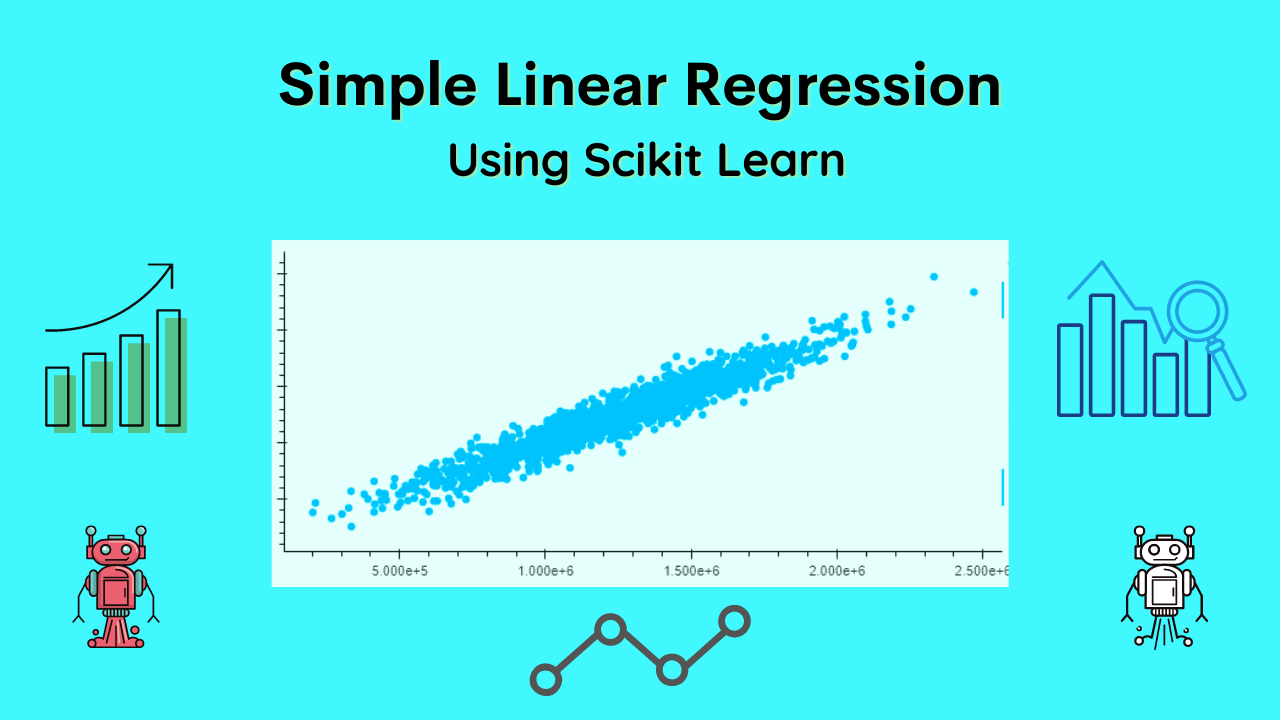

- Classification: Assigning inputs to one of several predefined categories. For example, classifying emails as spam or not spam.

- Regression: Predicting a continuous value. For example, forecasting house prices based on features like size, location, and age.

4. What kind of machine learning algorithm would you use to teach a robot to walk in various unknown areas?

Answer: Reinforcement Learning (RL) would be ideal for this task. In RL, the robot learns to navigate by receiving feedback from its actions in the environment.

It learns to maximize rewards by improving its movements over time.

5. What kind of ML algorithm can you use to segment your users into multiple groups?

Answer: Clustering algorithms, such as K-means or DBSCAN, are used for segmenting users into different groups based on their similarities. This is an example of unsupervised learning.

6. What type of learning algorithm relies on similarity measures to make a prediction?

Answer: K-Nearest Neighbors (KNN) is an algorithm that relies on similarity measures.

It predicts the output for a new instance based on the most common output among its nearest neighbors in the feature space.
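
The idea can be sketched in a few lines of plain Python. This is a minimal illustration, not a production implementation: the function name `knn_predict`, the toy points, and the choice of Euclidean distance are all assumptions made for the example.

```python
import math
from collections import Counter

def knn_predict(train_points, train_labels, query, k=3):
    """Predict the label of `query` by majority vote among its k nearest neighbors."""
    # Pair each training point's Euclidean distance to the query with its label.
    distances = [
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    ]
    # Keep the k closest neighbors.
    nearest = sorted(distances, key=lambda pair: pair[0])[:k]
    # The most common label among those neighbors is the prediction.
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

# Hypothetical toy data: two features per point, two classes.
points = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1), (5.0, 5.0), (5.2, 4.8), (4.9, 5.1)]
labels = ["a", "a", "a", "b", "b", "b"]

print(knn_predict(points, labels, (1.1, 1.0)))  # prints "a"
```

For regression, the same neighbors would be averaged instead of counted.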

7. What is an online learning system?

Answer: An online learning system updates its model continuously as new data comes in, rather than waiting for a complete dataset.

This is useful for applications where data arrives in a stream, such as stock price prediction.
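
As a rough sketch of the idea, the toy model below updates its parameters after every single sample instead of training on a full dataset. The class name `OnlineLinearModel`, the learning rate, and the simulated stream are assumptions for illustration only.

```python
class OnlineLinearModel:
    """One-feature linear model y = w * x + b, updated one sample at a time."""

    def __init__(self, learning_rate=0.1):
        self.w = 0.0
        self.b = 0.0
        self.learning_rate = learning_rate

    def predict(self, x):
        return self.w * x + self.b

    def learn_one(self, x, y):
        # One gradient step on the squared error of this single sample.
        error = self.predict(x) - y
        self.w -= self.learning_rate * error * x
        self.b -= self.learning_rate * error

model = OnlineLinearModel()

# Simulated stream (an assumption): samples of y = 2x + 1 arriving one by one.
stream = [(i / 10, 2 * (i / 10) + 1) for i in range(10)] * 500
for x, y in stream:
    model.learn_one(x, y)  # the model is usable after every update

print(round(model.w, 3), round(model.b, 3))
```

Because each update only needs the current sample, nothing from the stream has to be stored.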

8. What is out-of-core learning?

Answer: Out-of-core learning refers to techniques that enable machine learning on datasets too large to fit into a computer’s memory. This is done by processing the data in small chunks.
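
The chunking pattern might look like the sketch below, which computes a mean while holding only one chunk in memory at a time. The helper names `iter_chunks` and `chunked_mean` are hypothetical, and the lazy `range` stands in for a large file that would be read piece by piece.

```python
def iter_chunks(values, chunk_size):
    """Yield successive lists of at most `chunk_size` items from any iterable."""
    chunk = []
    for value in values:
        chunk.append(value)
        if len(chunk) == chunk_size:
            yield chunk
            chunk = []
    if chunk:  # last, possibly smaller, chunk
        yield chunk

def chunked_mean(values, chunk_size=1_000):
    """Compute the mean while only one chunk is held in memory."""
    total, count = 0.0, 0
    for chunk in iter_chunks(values, chunk_size):
        total += sum(chunk)
        count += len(chunk)
    return total / count

# range() is lazy, so the full sequence is never materialized as a list.
print(chunked_mean(range(1, 100_001)))  # prints 50000.5
```

The same loop structure applies to model training when the algorithm supports incremental updates.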

9. Can you name a couple of ML challenges that you have faced?

Answer: Two common challenges are:

- Data Quality: Handling noisy, incomplete, or inconsistent data.

- Model Overfitting: When a model performs well on training data but poorly on new, unseen data.

10. Can you give one example of hyperparameter tuning with respect to a classification algorithm?

Answer: For a Random Forest classifier, hyperparameter tuning might involve adjusting the number of trees in the forest, the maximum depth of each tree, and the minimum samples required to split a node.

Techniques like grid search or random search are often used for this purpose.

11. What is out-of-bag evaluation?

Answer: Out-of-bag evaluation is a technique used with ensemble methods like Random Forests.

It provides an internal estimate of model performance by using the predictions of trees that did not see each specific sample during training.

12. What do you understand by hard and soft voting classifiers?

Answer: In ensemble learning:

- Hard Voting: Each classifier votes for a class, and the class with the majority votes is chosen.

- Soft Voting: Each classifier provides a probability for each class, and the probabilities are averaged to make the final decision.
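
The difference is easiest to see on a case where the two schemes disagree. The sketch below is illustrative only: `hard_vote`, `soft_vote`, and the three sets of class probabilities are made up for the example.

```python
from collections import Counter

def hard_vote(predicted_labels):
    """Return the label predicted by the most classifiers."""
    return Counter(predicted_labels).most_common(1)[0][0]

def soft_vote(probabilities):
    """Average per-class probabilities and return the class with the highest mean."""
    classes = probabilities[0].keys()
    averaged = {
        cls: sum(p[cls] for p in probabilities) / len(probabilities)
        for cls in classes
    }
    return max(averaged, key=averaged.get)

# Hypothetical outputs of three classifiers for one email.
probabilities = [
    {"spam": 0.45, "not spam": 0.55},
    {"spam": 0.40, "not spam": 0.60},
    {"spam": 0.95, "not spam": 0.05},
]
# Each classifier's hard prediction is its most probable class.
labels = [max(p, key=p.get) for p in probabilities]

print(hard_vote(labels))         # prints "not spam" (2 votes to 1)
print(soft_vote(probabilities))  # prints "spam" (mean probability 0.6)
```

Soft voting lets one very confident classifier outweigh two hesitant ones, which is why it needs models that output reasonably calibrated probabilities.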

13. Suppose your ML algorithm is taking 5 minutes to train. How will you bring down the time to 5 seconds? (Hint: Distributed Computation)

Answer: To reduce training time, you could use distributed computing frameworks like Apache Spark or Dask, which allow parallel processing of data across multiple machines.

14. How can you combine multiple ML models into one model?

Suppose you have trained five different models with the same training dataset, and all of them have achieved 95% precision. Is there any chance that you can combine all these models to get a better result? If yes, how? If no, why?

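Answer: Yes, there is a good chance. You can combine already-trained models with ensemble techniques such as a voting classifier (hard or soft voting) or stacking, where a final model learns how to weight the base models' predictions. The ensemble usually helps most when the models make different, largely uncorrelated errors; if all five models make the same mistakes, combining them adds little. Bagging and boosting are related ensemble methods, but they train new models rather than combine existing ones.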

15. What do you understand by Gradient Descent? How would you explain Gradient Descent to a kid?

Answer: Gradient Descent is an optimization algorithm used to minimize the loss function in machine learning models.

Explaining to a kid: Imagine you’re trying to find the lowest point in a valley while blindfolded. You take small steps downhill based on the slope you feel under your feet until you reach the bottom.
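
The "small steps downhill" can be written out directly. The sketch below minimizes the toy function f(x) = (x - 3)^2, whose gradient is 2(x - 3); the function, starting point, and learning rate are assumptions chosen for the example.

```python
def gradient_descent(gradient, start, learning_rate=0.1, steps=100):
    """Repeatedly step against the gradient, starting from `start`."""
    x = start
    for _ in range(steps):
        # Move downhill: the step size is proportional to the slope.
        x -= learning_rate * gradient(x)
    return x

# Toy loss f(x) = (x - 3)^2 has its minimum at x = 3; its gradient is 2 * (x - 3).
minimum = gradient_descent(lambda x: 2 * (x - 3), start=10.0)
print(round(minimum, 4))  # prints 3.0
```

With a learning rate that is too large the steps overshoot the valley floor, and with one that is too small progress becomes very slow.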

16. Can you explain the difference between regression and classification?

Answer:

- Regression: Predicts a continuous numeric output, such as 50 or 50.4 (e.g., temperature).

- Classification: Assigns inputs to discrete categories (e.g., labeling an animal image as cat or dog).

17. Explain a clustering algorithm of your choice.

Answer: K-means clustering is a popular algorithm.

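It partitions data into K clusters by repeatedly assigning each point to its nearest centroid and then moving each centroid to the mean of its assigned points, which reduces the total squared distance between points and their cluster centroids.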
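
A bare-bones version of the K-means loop is sketched below. It assumes the initial centroids are supplied by the caller and runs a fixed number of iterations; real implementations typically choose the initial centroids more carefully and stop once the assignments no longer change. The function name `k_means` and the data are hypothetical.

```python
import math

def k_means(points, centroids, iterations=10):
    """Alternate between assigning points to centroids and recomputing centroids."""
    for _ in range(iterations):
        # Assignment step: each point joins the cluster of its nearest centroid.
        clusters = [[] for _ in centroids]
        for point in points:
            nearest = min(
                range(len(centroids)),
                key=lambda i: math.dist(point, centroids[i]),
            )
            clusters[nearest].append(point)
        # Update step: move each centroid to the mean of its cluster
        # (an empty cluster keeps its previous centroid).
        centroids = [
            tuple(sum(coords) / len(cluster) for coords in zip(*cluster))
            if cluster else centroid
            for cluster, centroid in zip(clusters, centroids)
        ]
    return centroids, clusters

# Hypothetical 2-D data with two visible groups.
data = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (9.0, 8.5), (8.5, 9.0)]
centroids, clusters = k_means(data, centroids=[(1.0, 1.0), (8.0, 8.0)])
print(centroids)
```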

18. How can you explain ML, DL, NLP, Computer Vision, and Reinforcement Learning with examples in your own terms?

Answer:

- Machine Learning (ML): Teaching computers to learn from data, like predicting house prices.

- Deep Learning (DL): A subset of ML using neural networks with many layers, like facial recognition.

- Natural Language Processing (NLP): Understanding and generating human language, like chatbots.

- Computer Vision: Teaching computers to interpret visual information, like object detection in images.

- Reinforcement Learning (RL): Learning by trial and error to maximize rewards, like a robot learning to walk.

19. How can you explain semi-supervised ML in your own way with an example?

Answer: Semi-supervised learning uses a small amount of labeled data and a large amount of unlabeled data.

For example, teaching a model to classify emails as spam or not spam using a few labeled emails and a lot of unlabeled ones.

20. What is the difference between abstraction and generalization in your own words?

Answer:

- Abstraction: Simplifying complex systems by focusing on essential details.

- Generalization: Ensuring a model performs well on new, unseen data, not just the training data.

21. What are the steps that you followed in your last project to prepare the dataset?

Answer: Steps included:

- Data Collection: Gathering raw data.

- Data Cleaning: Handling missing values, removing duplicates.

- Data Transformation: Normalizing, encoding categorical features.

- Feature Selection: Choosing relevant features for the model.

- Splitting Data: Dividing into training and testing sets.

22. In your last project, what steps were involved in the model selection procedure?

Answer:

- Define Problem: Understanding the task and requirements.

- Initial Modeling: Trying different algorithms.

- Evaluation: Using metrics like accuracy, precision, recall.

- Hyperparameter Tuning: Optimizing model parameters.

- Cross-Validation: Ensuring stability and generalization of the model.

23. If I give you two columns of any dataset, what steps will be involved to check the relationship between those two columns?

Answer:

- Exploratory Data Analysis (EDA): Visualizing data using plots like scatter plots.

- Statistical Tests: Calculating correlation coefficients.

- Hypothesis Testing: Checking for statistical significance.
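
For two numeric columns, the correlation step can be sketched as a Pearson correlation coefficient computed by hand. The column names and values below are invented for illustration.

```python
import math

def pearson_correlation(xs, ys):
    """Pearson correlation coefficient between two equally long numeric columns."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    # Sum of co-deviations and of squared deviations from each mean.
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sum((x - mean_x) ** 2 for x in xs)
    spread_y = sum((y - mean_y) ** 2 for y in ys)
    return covariance / math.sqrt(spread_x * spread_y)

# Hypothetical columns: hours studied and exam score.
hours = [1, 2, 3, 4, 5]
scores = [52, 55, 61, 64, 70]
r = pearson_correlation(hours, scores)
print(round(r, 3))  # prints 0.993
```

A value near 1 or -1 indicates a strong linear relationship, while a value near 0 only rules out a linear one, so a scatter plot is still worth checking.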

24. Can you please explain at least five different strategies to handle missing values in a dataset?

Answer:

- Removal: Deleting rows or columns with missing values.

- Imputation: Filling missing values with mean, median, or mode.

- Predictive Imputation: Using ML models to predict missing values.

- Forward/Backward Fill: Using previous or next value to fill gaps.

- Using Algorithms that Handle Missing Data: Some tree-based implementations, such as certain gradient-boosted tree libraries, can handle missing values natively.
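
Three of these strategies are simple enough to sketch on a single column. The helper names and the sample readings are hypothetical, and `None` is assumed to mark a missing value.

```python
def drop_missing(values):
    """Removal: keep only the observed values."""
    return [v for v in values if v is not None]

def impute_mean(values):
    """Imputation: replace each missing value with the mean of the observed ones."""
    observed = drop_missing(values)
    mean = sum(observed) / len(observed)
    return [mean if v is None else v for v in values]

def forward_fill(values):
    """Forward fill: carry the last observed value into each gap."""
    filled, last = [], None
    for v in values:
        if v is not None:
            last = v
        filled.append(last)  # a gap at the very start stays None
    return filled

readings = [10.0, None, 14.0, None, 18.0]
print(drop_missing(readings))  # [10.0, 14.0, 18.0]
print(impute_mean(readings))   # [10.0, 14.0, 14.0, 14.0, 18.0]
print(forward_fill(readings))  # [10.0, 10.0, 14.0, 14.0, 18.0]
```

Forward fill mostly makes sense for ordered data such as time series, where the previous value is a plausible guess.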

25. What kind of different issues have you faced with raw data? Mention at least five issues.

Answer:

- Missing Values: Gaps in the dataset.

- Noisy Data: Random errors, outliers, or irrelevant values mixed into the data.

- Inconsistent Data: Conflicting data entries.

- Duplicate Data: Repeated entries.

- Imbalanced Data: Disproportionate class distribution.

26. What is your strategy to handle categorical datasets? Explain with an example.

Answer: Strategies include:

- Label Encoding: Converting categories to numbers (e.g., “red” to 1, “blue” to 2).

- One-Hot Encoding: Creating binary columns for each category (e.g., “red” -> [1, 0], “blue” -> [0, 1]).

- Example: For a “color” feature with values [“red”, “blue”], one-hot encoding would create two columns: “color_red” and “color_blue”.
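
Both encodings can be sketched in plain Python. The function names are hypothetical, and the integer codes here start at 0 in alphabetical order, which is just one possible convention.

```python
def label_encode(values):
    """Map each category to an integer code (alphabetical order, starting at 0)."""
    mapping = {category: code for code, category in enumerate(sorted(set(values)))}
    return [mapping[v] for v in values], mapping

def one_hot_encode(values, feature_name):
    """Create one binary column per category, named like 'color_red'."""
    categories = sorted(set(values))
    return [
        {f"{feature_name}_{category}": int(v == category) for category in categories}
        for v in values
    ]

colors = ["red", "blue", "red"]

codes, mapping = label_encode(colors)
print(codes)  # [1, 0, 1]

for row in one_hot_encode(colors, "color"):
    print(row)
# {'color_blue': 0, 'color_red': 1}
# {'color_blue': 1, 'color_red': 0}
# {'color_blue': 0, 'color_red': 1}
```

Label encoding implies an order between categories, so one-hot encoding is usually the safer choice for unordered features such as color.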

27. How do you define a model in terms of machine learning or in your own words?

Answer: A model in machine learning is a mathematical representation or algorithm that learns patterns from data and makes predictions or decisions based on new inputs.

28. What do you understand by k-fold validation and in what situation have you used k-fold cross-validation?

Answer: K-fold cross-validation is a technique used to evaluate the performance of a model. The dataset is divided into k parts (or folds) of roughly equal size.

The model is trained k times, each time using k-1 folds for training and the remaining one fold for testing.

This process helps ensure that every data point gets to be in the test set exactly once. I’ve used k-fold cross-validation in situations where I needed a more reliable estimate of model performance, like when tuning hyperparameters to avoid overfitting.
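
The splitting logic can be sketched as a generator of index lists. The name `k_fold_indices` is hypothetical, and the sketch assumes the data has already been shuffled if shuffling is needed.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, test_indices) pairs, one pair per fold."""
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, test
        start += size

for train, test in k_fold_indices(n_samples=10, k=3):
    print("train:", train, "test:", test)
```

Each index appears in exactly one test fold, so averaging the k test scores uses every sample once for evaluation.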

29. What is the meaning of bootstrap sampling? Explain in your own words.

Answer: Bootstrap sampling is a method where we create multiple datasets by randomly sampling with replacement from the original dataset.

Each new dataset is the same size as the original. This technique is often used to estimate the accuracy of a model or to measure the variability of an estimate.

It’s like picking marbles from a bag, putting each one back after you pick it so it could be picked again.
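
The marble picture translates into code as sampling with replacement. The sketch below uses bootstrap resamples to estimate how much a sample mean varies; the data values, the number of resamples, and the fixed seed are assumptions for the example.

```python
import random
import statistics

def bootstrap_means(data, n_resamples=1000, seed=0):
    """Return the mean of each bootstrap resample of `data`."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    means = []
    for _ in range(n_resamples):
        # Sample with replacement, same size as the original dataset.
        resample = rng.choices(data, k=len(data))
        means.append(statistics.mean(resample))
    return means

# Hypothetical measurements.
data = [2.1, 2.5, 2.8, 3.0, 3.3, 3.9, 4.2]
means = bootstrap_means(data)

# The spread of the resampled means estimates the standard error of the mean.
standard_error = statistics.stdev(means)
print(round(standard_error, 3))
```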

30. What do you understand by underfitting and overfitting of a model with examples?

Answer:

- Underfitting: This happens when a model is too simple to capture the underlying patterns in the data. For example, using a linear model to predict a complex, non-linear trend would lead to underfitting, resulting in poor performance on both training and test data.

- Overfitting: This occurs when a model is too complex and captures not just the underlying patterns but also the noise in the training data. For example, a decision tree with too many branches might perform very well on training data but poorly on new data because it has learned the noise rather than the signal.

31. What is the difference between cross-validation and bootstrapping?

Answer:

- Cross-Validation: This involves splitting the data into k subsets (folds) and using each fold as a test set while training on the remaining folds. It’s used to get a reliable estimate of model performance.

- Bootstrapping: This involves creating multiple new datasets by sampling with replacement from the original dataset. It’s often used to estimate the distribution of a statistic (like the mean or standard deviation) or to assess model stability.

32. What do you understand by the silhouette coefficient?

Answer: The silhouette coefficient is a measure of how similar an object is to its own cluster compared to other clusters. It ranges from -1 to 1:

- A value close to 1 means the object is well matched to its own cluster and poorly matched to neighboring clusters.

- A value close to 0 means the object is on or very close to the decision boundary between two neighboring clusters.

- A value close to -1 means the object might have been assigned to the wrong cluster.

It’s used to assess the quality of clustering results.
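
For a single point, the coefficient is (b - a) / max(a, b), where a is the mean distance to the other points in its own cluster and b is the smallest mean distance to the points of any other cluster. The sketch below applies that formula to one-dimensional toy data; the function names and values are made up for illustration.

```python
def mean_distance(point, others):
    """Mean absolute distance from a 1-D point to a list of other points."""
    return sum(abs(point - other) for other in others) / len(others)

def silhouette_coefficient(point, cluster_mates, other_clusters):
    """Silhouette of one point, given the rest of its cluster and the other clusters."""
    a = mean_distance(point, cluster_mates)                     # cohesion
    b = min(mean_distance(point, c) for c in other_clusters)   # separation
    return (b - a) / max(a, b)

# A point sitting tightly inside its own cluster scores close to 1.
well_placed = silhouette_coefficient(1.0, [1.2, 0.8], [[5.0, 5.2, 4.8]])

# A point closer to another cluster than to its own scores below 0.
misplaced = silhouette_coefficient(3.0, [1.0], [[3.5, 4.0]])

print(round(well_placed, 2), round(misplaced, 3))  # prints 0.95 -0.625
```

Averaging this value over all points gives a single score for the whole clustering.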

33. What is the advantage of using the ROC Score?

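Answer: The ROC AUC score is the area under the ROC (Receiver Operating Characteristic) curve, which plots the true positive rate against the false positive rate at each classification threshold. It measures the ability of a classifier to distinguish between classes: 1.0 is a perfect ranking and 0.5 is no better than random guessing. The advantages are: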

- Performance Measurement: It provides a single metric to evaluate the performance of a binary classifier across all possible classification thresholds.

- Threshold Independence: Unlike accuracy, which depends on a specific threshold, the ROC score summarizes performance across all thresholds.

- Comparative Metric: It’s useful for comparing different models, as it considers both true positive and false positive rates.

Using the ROC score helps in selecting the best model for classification tasks. It is less affected by class imbalance than accuracy, although with heavily imbalanced datasets a precision-recall curve is often more informative.
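
One way to see why the score does not depend on a threshold is to compute it as a ranking statistic: the fraction of (positive, negative) pairs in which the positive example receives the higher score, with ties counted as half. The sketch below uses that pairwise definition, which is simple but slow for large datasets; the function name and the four example scores are assumptions.

```python
def roc_auc(labels, scores):
    """AUC as the fraction of positive/negative pairs ranked correctly."""
    positives = [s for label, s in zip(labels, scores) if label == 1]
    negatives = [s for label, s in zip(labels, scores) if label == 0]
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0   # positive ranked above negative
            elif p == n:
                wins += 0.5   # a tie counts as half
    return wins / (len(positives) * len(negatives))

# Hypothetical true labels and predicted scores for four samples.
labels = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
print(roc_auc(labels, scores))  # prints 0.75
```

Three of the four positive/negative pairs are ordered correctly here, which gives 0.75 without ever choosing a cutoff.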

Conclusion

These machine learning interview questions provide a comprehensive overview of key concepts in machine learning, ranging from fundamental definitions and techniques to more advanced topics and practical applications.

Understanding these concepts is crucial for anyone looking to excel in the field of machine learning.

By grasping the basics of machine learning algorithms, validation methods, and performance metrics, you can effectively tackle real-world problems and build robust models. These short machine learning interview questions are meant to help you understand those concepts.

Techniques like k-fold cross-validation and bootstrapping help you estimate how well your models generalize, while understanding concepts like underfitting, overfitting, and the ROC score helps in fine-tuning and evaluating model performance.

Whether you’re preparing for a machine learning interview or seeking to deepen your knowledge, mastering these topics and questions will equip you with the skills needed to succeed in the dynamic and evolving landscape of machine learning. Keep exploring, experimenting, and learning to stay ahead in this exciting field.

Meet Nitin, a seasoned professional in the field of data engineering. With a Post Graduation in Data Science and Analytics, Nitin is a key contributor to the healthcare sector, specializing in data analysis, machine learning, AI, blockchain, and various data-related tools and technologies. As the Co-founder and editor of analyticslearn.com, Nitin brings a wealth of knowledge and experience to the realm of analytics. Join us in exploring the exciting intersection of healthcare and data science with Nitin as your guide.