This article explains the bias-variance tradeoff and discusses how to handle bias and variance in machine learning.

There is an inherent trade-off between bias and variance when creating prediction models.

Simple models are easy to understand and use, but they tend to suffer from high bias.

Complex models, on the other hand, are harder to understand; they have low bias, but they tend to have high variance.

The bias-variance tradeoff is a key concept in statistical learning theory, and it guides the tuning of hyperparameters for machine learning algorithms.

In machine learning, the performance of a prediction model is evaluated through the errors it makes.

Leaving aside the irreducible noise in the data, two types of error lead to flawed predictions.

These two errors are bias and variance.

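Bias can be defined as the average difference between the predicted value and the actual value, where the average is taken over models trained on different samples of the data.

All else being equal, higher bias results in lower accuracy, while lower bias results in higher accuracy.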
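For squared-error loss, the link between these two errors and accuracy can be written out explicitly. The notation here is an assumption made for illustration and does not come from the article: f is the true function, f-hat is the model fitted on a random training set, y = f(x) + ε where ε is zero-mean noise with variance σ² that is independent of the training set, and the expectations run over training sets and noise.

```latex
\mathbb{E}\big[(y - \hat{f}(x))^2\big]
  = \underbrace{\big(\mathbb{E}[\hat{f}(x)] - f(x)\big)^2}_{\text{bias}^2}
  + \underbrace{\mathbb{E}\big[\big(\hat{f}(x) - \mathbb{E}[\hat{f}(x)]\big)^2\big]}_{\text{variance}}
  + \underbrace{\sigma^2}_{\text{irreducible noise}}
```

Under these assumptions, lowering one term only improves accuracy if the others do not grow by more, which is why the two errors have to be handled together.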

What is the Bias-Variance Tradeoff?

While making predictions, a machine learning algorithm needs to choose the right set of parameters for the problem at hand.

For example, suppose prediction model A predicts a student’s grade from class-wide percentages.

The prediction is based on the percentage of A, B, and C students in a given class, for example 90% A, 10% B, and so on.

Prediction model B predicts a student’s grade based on their subject, e.g., A = 91, B = 88, and so on.

There is a lot of variation in grading. For example, if a student in a certain class gets 90% in Math and 10% in Physics, a model that relies on the class-wide percentages might still predict an A+ for them in Physics. This kind of variation reduces accuracy.

How to Handle the Bias-Variance Tradeoff in Machine Learning?

If the overall accuracy of a system falls below the required threshold, its bias and variance errors need to be corrected.

Comparing accuracy and error rate on the training data and on held-out data is a good starting point. It shows how accurate a model is and how prone it is to under- or overestimating the value for some observations.

Prediction accuracy is strongly tied to model complexity, so there is an overarching tradeoff, and models of intermediate complexity are a sensible initial choice, for example when predicting binary outcomes.

As more data becomes available, more complex features can be incorporated into the predictions than the initial models allowed.
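A minimal sketch of this kind of comparison, assuming a synthetic regression problem: the sine-shaped target, the noise level, the polynomial degrees and the helper name `train_val_errors` are all illustrative choices, not something the article specifies. It fits polynomials of increasing degree and reports the error on the training data next to the error on held-out data.

```python
import numpy as np
from numpy.polynomial import polynomial as P


def train_val_errors(degrees, n_train=30, n_val=200, noise=0.3, seed=0):
    """Return {degree: (train_mse, val_mse)} for polynomial fits to noisy data."""
    rng = np.random.default_rng(seed)

    def true_fn(x):
        # Assumed ground truth, chosen only for illustration.
        return np.sin(2 * np.pi * x)

    x_train = rng.uniform(0, 1, n_train)
    y_train = true_fn(x_train) + rng.normal(0, noise, n_train)
    x_val = rng.uniform(0, 1, n_val)
    y_val = true_fn(x_val) + rng.normal(0, noise, n_val)

    results = {}
    for d in degrees:
        coefs = P.polyfit(x_train, y_train, d)  # least-squares polynomial fit
        train_mse = np.mean((y_train - P.polyval(x_train, coefs)) ** 2)
        val_mse = np.mean((y_val - P.polyval(x_val, coefs)) ** 2)
        results[d] = (train_mse, val_mse)
    return results


errors = train_val_errors([1, 3, 9])
for d, (tr, va) in errors.items():
    print(f"degree={d}  train MSE={tr:.3f}  validation MSE={va:.3f}")
```

A model whose training and validation errors are both high is likely dominated by bias, while a low training error paired with a clearly higher validation error usually points to variance.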

Here are some of the techniques used to handle bias and variance in machine learning systems.

How to Handle Bias?

Bias is the difference between the actual value and the value a specific model predicts on average. High bias means that a model underfits the data.

Model complexity is what creates the bias-variance tradeoff: a model that is too complex overfits the data, while a model that is too simple underfits it.

Variance, on the other hand, can be defined as the average deviation of a model’s predictions from their own mean when the model is trained on different samples of the data.

It can be higher or lower than the bias. The two are linked, so choosing how much bias to accept also determines the variance.
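Both quantities can be estimated by simulation when the true function is known. The sketch below is an illustration under stated assumptions rather than a prescribed procedure: the sine target, the fixed training inputs, the noise level and the function name `bias_variance` are all hypothetical. It refits a polynomial on many noisy copies of the training targets and then measures how far the average prediction is from the truth (squared bias) and how much the predictions scatter around their own average (variance).

```python
import numpy as np
from numpy.polynomial import polynomial as P


def bias_variance(degree, n_repeats=200, n_train=30, noise=0.3, seed=0):
    """Estimate (squared bias, variance) of a polynomial fit, averaged over test points."""
    rng = np.random.default_rng(seed)

    def true_fn(x):
        # Assumed ground truth, chosen only for illustration.
        return np.sin(2 * np.pi * x)

    x_train = np.linspace(0, 1, n_train)   # fixed inputs; only the noise is redrawn
    x_test = np.linspace(0.05, 0.95, 50)

    preds = np.empty((n_repeats, x_test.size))
    for i in range(n_repeats):
        y_train = true_fn(x_train) + rng.normal(0, noise, n_train)
        coefs = P.polyfit(x_train, y_train, degree)
        preds[i] = P.polyval(x_test, coefs)

    mean_pred = preds.mean(axis=0)
    bias_sq = np.mean((mean_pred - true_fn(x_test)) ** 2)  # systematic error
    variance = np.mean(preds.var(axis=0))                  # spread across refits
    return bias_sq, variance


results = {d: bias_variance(d) for d in (1, 3, 9)}
for d, (b, v) in results.items():
    print(f"degree={d}  bias^2={b:.4f}  variance={v:.4f}")
```

In this setup the straight-line model carries most of its error as bias, and the degree-9 model carries most of its error as variance.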

Here is a step-by-step guide to identifying the right bias and variance for a machine learning model.

How much variance you should tolerate depends entirely on the type of data used. Given a dataset of 100 samples, 20 x data points are assumed to be predictors and 40 samples are assumed to be non-predictors.

Add an error term of 0.5 for the total sum of the model’s errors and we arrive at the total predicted value, formula_1.

Determine the minimum variance you need for your model and set your bias to give you less than 0.5.

How to Handle Variance?

Variance is the error that comes from a model’s sensitivity to the particular training data it was given, and it increases as the number of parameters increases.

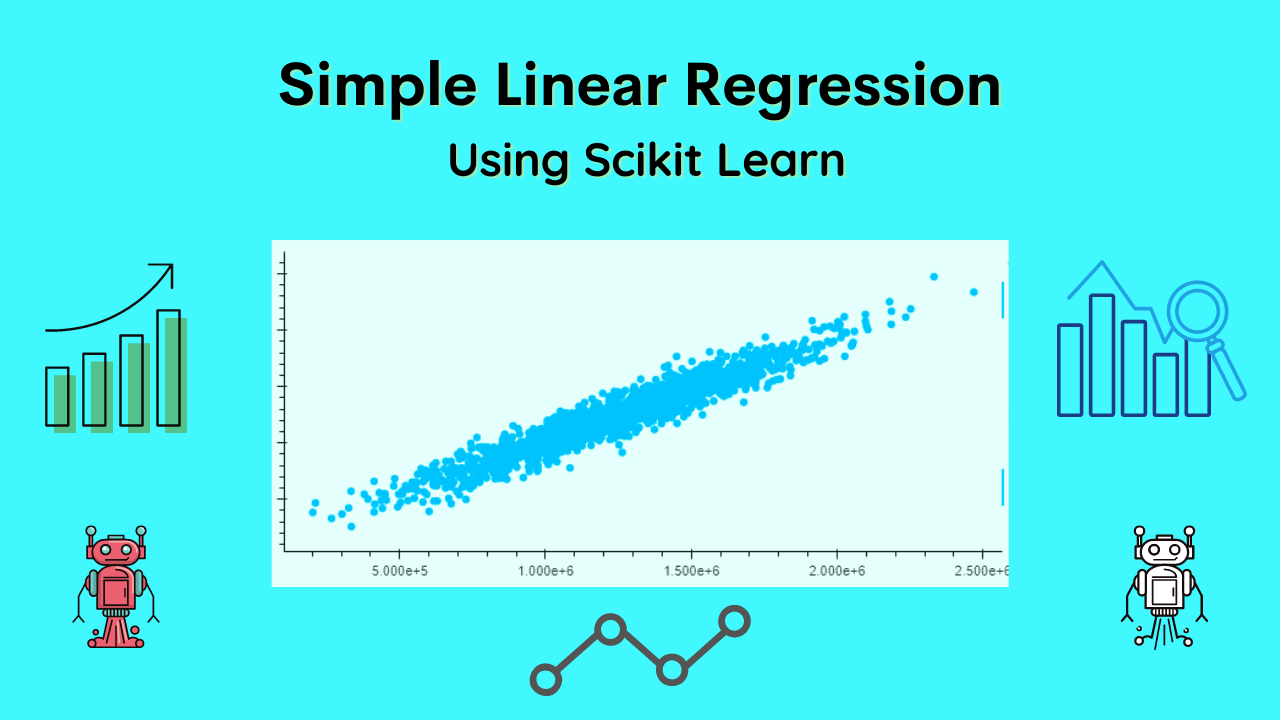

In the image, you can see the categories for bias and variance. As the number of parameters increases, the variance error increases with it.

Consider a random variable X, which we predict to have a certain value. We call that the probability.

We make predictions with X against all other variables of the same type, but with a constant probability, by multiplying it by all variables and getting the ‘predictability’.

If you add this predictability with a single variable and predict against all the other variables, you are going to get this prediction.

Why Too Much Variance?

Variance is the variability in the output of the model. This error arises in two situations, both of which are related to bias and to the bias-variance tradeoff.

There is an error in the input that has been fed to the model. This error varies in magnitude across different examples.

The loss associated with this error shows up as bias or variance, that is, as the deviation of the predicted value from the actual value, which is the basis for estimating accuracy in machine learning.

Why Low Variance?

Variance, on the other hand, is the amount of error in the predictions calculated for a specific output value.

Good examples of variance can be found in multimedia data such as images and words.

On average, you expect high variance in images. For instance, image data can be corrupted by distortion when the picture is taken.

That is the reason low variance is required in images, to avoid data corruption, and vice versa.

Why Too Little Variance?

Variance is often associated with high-level concepts. For example, variance refers to the amount of uncertainty involved in any prediction.

Variance is also associated with the inaccuracy of a prediction. High variance results in an algorithm with low accuracy on new data and a high degree of overfitting.

As an example, on the face of it, the decision tree can look like a straight line. However, given that there are 2 variables, X and Y, and a pattern is predicted, there are multiple routes to reach the target.

Two of these routes are:

1. Redirect the decision from node A to node C,

2. Redirect the decision from node A to node B

Also, a tree with a large number of nodes will have a higher variance. This is because, with many possible routes, each route is supported by fewer training examples.

What is Overfitting?

Overfitting occurs when a model fits its training data too exactly, capturing noise along with the underlying pattern. It tends to become more likely, not less, as training time increases.

Classical Data Set: This is the gold standard of data in machine learning, because it represents the complete, real-world situation from which a prediction model is being trained.

Bayes: Bayes’ theorem is a mathematical formula used in machine learning to model complex data, in addition to its theoretical role.

Generalization: The end goal of supervised learning; it describes how well the predictions we get after training the algorithm carry over to new data.

Original Data: This is the data set for which a prediction model is being trained.

What is Underfitting?

When we are training an estimator, if the values it predicts turn out to be consistently biased, that is a strong sign that the estimator is underfitting the data.

The feature space representation and the hypothesis function of the estimator are then too limited to capture all the complexity present in the problem.

In computer vision, underfitting means that a model misses the target attribute. With normalization, a model will produce output that’s closer to the final objective and will also reproduce the observations better.

When the fit is not good enough, the model will produce incorrect predictions.

Underfitting can lead to results like the moon not being visible or even a planet being found in the atmosphere.

Selective Parameter Overfitting is another form of underfitting, which arises when a system deviates from its best-fit settings.

It takes place when the system is not optimized for a certain area of the data, such as areas known to be rich in an indicator like “Atherosclerosis”, “Children”, “Alzheimer’s”, “Arthritis”, etc.

Conclusion

When it comes to machine learning, one of the most important questions is how to handle the tradeoff between bias and variance.

Bias refers to the systematic error of your estimation or model prediction, and variance refers to the model’s sensitivity to changes in the data and input features.

The tradeoff appears because, in order to avoid high variance, also known as overfitting, you have to reduce the model’s sensitivity to the training data. But this can result in underfitting, which is referred to as high bias.
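One common way to reduce a model’s sensitivity is regularization. The article does not name a specific technique, so the ridge penalty used below is a swapped-in example, and the sine target, the polynomial degree, the `alpha` values and the function name `ridge_bias_variance` are all assumptions made for illustration. The sketch refits a degree-9 polynomial with different penalty strengths and estimates squared bias and variance for each.

```python
import numpy as np


def ridge_bias_variance(alpha, degree=9, n_repeats=200, n_train=30, noise=0.3, seed=0):
    """Estimate (squared bias, variance) of ridge-penalized polynomial regression."""
    rng = np.random.default_rng(seed)

    def true_fn(x):
        # Assumed ground truth, chosen only for illustration.
        return np.sin(2 * np.pi * x)

    x_train = np.linspace(0, 1, n_train)   # fixed inputs; only the noise is redrawn
    x_test = np.linspace(0.05, 0.95, 50)
    X_train = np.vander(x_train, degree + 1, increasing=True)
    X_test = np.vander(x_test, degree + 1, increasing=True)
    # Penalized normal-equation matrix (the intercept is penalized too, for simplicity).
    A = X_train.T @ X_train + alpha * np.eye(degree + 1)

    preds = np.empty((n_repeats, x_test.size))
    for i in range(n_repeats):
        y_train = true_fn(x_train) + rng.normal(0, noise, n_train)
        w = np.linalg.solve(A, X_train.T @ y_train)  # closed-form ridge weights
        preds[i] = X_test @ w

    bias_sq = np.mean((preds.mean(axis=0) - true_fn(x_test)) ** 2)
    variance = np.mean(preds.var(axis=0))
    return bias_sq, variance


ridge_results = {a: ridge_bias_variance(a) for a in (1e-6, 1e-2, 10.0)}
for a, (b, v) in ridge_results.items():
    print(f"alpha={a:g}  bias^2={b:.4f}  variance={v:.4f}")
```

A larger `alpha` makes the fit less responsive to the noise in any one training set, which lowers variance, but it also pulls the fit away from the true curve, which raises bias.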


DataScience Team is a group of Data Scientists working as IT professionals who add value to analayticslearn.com as an Author. This team is a group of good technical writers who write on several types of data science tools and technologies to build a more skillful community of learners.