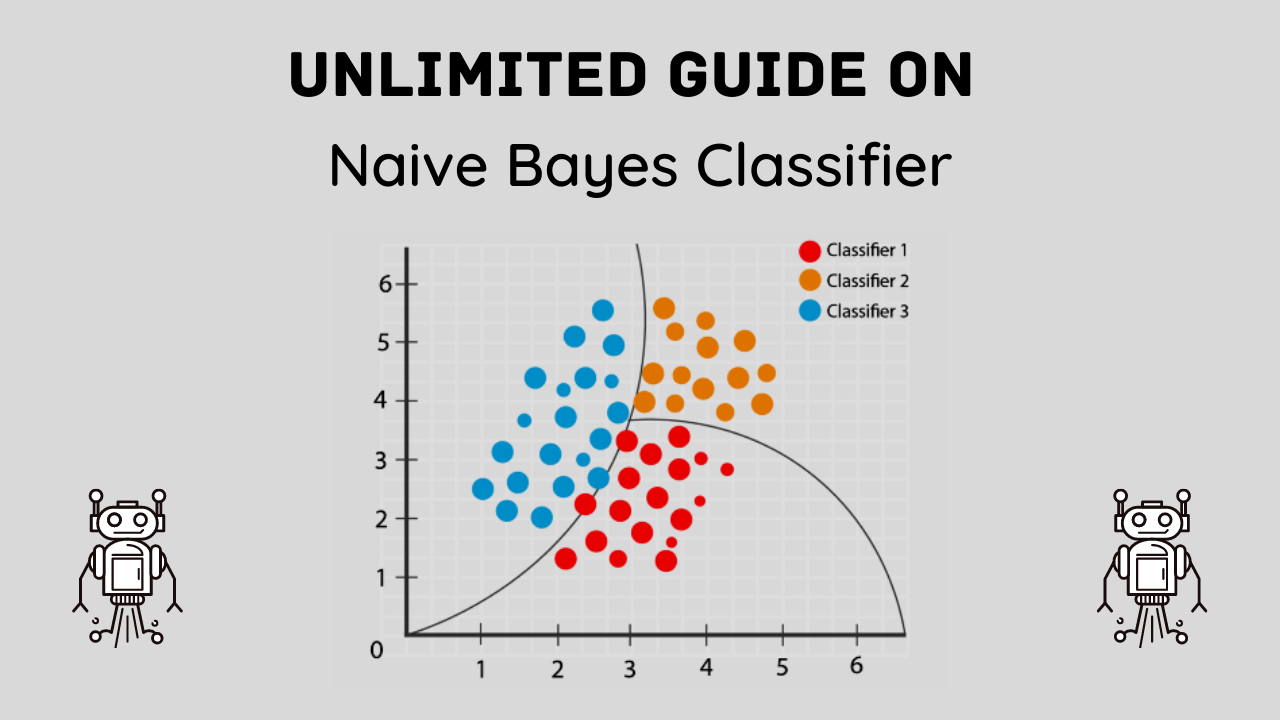

In this blog post, You are going to learn Naive Bayes Classifier in detail with different usabilities and applications in machine learning.

The Naïve Bayes Classifier is a supervised machine learning algorithm based on Bayes’ theorem.

The Naïve Bayes Classifier has many variants, among them the Multinomial Naïve Bayes, the Bernoulli Naïve Bayes, the Dirichlet Naïve Bayes and the Gaussian Naïve Bayes Model.

What Is the Naïve Bayes Classifier?

The Naïve Bayes Classifier is a probabilistic classifier based on applying Bayes’ theorem with strong (naïve) independence assumptions between every pair of features.

In other words, it assumes that there is no interaction or correlation between any two features.

It was developed in 1965 by Harold Jeffreys and Richard Whittle and has found numerous applications in different areas such as information retrieval, machine learning, text mining, and computer vision.

Other types of classifiers include logistic regression and support vector machines (SVMs).

SVMs have often been touted as one of the most accurate classification models, but they are also more complex than other models like naïve Bayes.

Conversely, naïve Bayes is computationally cheap, simple to implement, and can be easily parallelized.

They are less sensitive to dimensionality reduction and can still work well even if you do not use all training examples for building an SVM.

Related Article: Ultimate Guide on Random Forests: What is it?

How Does the Naïve Bayes Algorithm Work?

The algorithm uses a training set of data and applies Bayes’ theorem to compute parameters, in order to predict unseen data.

This section is all about how naïve-Bayes works with large datasets and sparse matrices, like pre-processed text data which creates thousands of vectors depending on words in a dictionary.

It also discusses how naïve-Bayes classifiers are naïve in that they assume conditional independence among features (i.e., words).

Although such independence rarely exists, treating it as such makes things much easier to solve by reducing our dimensionality from O(n2) to O(nlogn), where n is our number of observations and l is our number of features.

Related Article: Principal Component Analysis by Sklearn – Linear Dimensionality Reduction

Advantage and Disadvantage of Naive Bayes classifier

Advantages

The main advantage of Naive Bayes over other classification techniques is that it doesn’t need any tuning parameters and can work very well even when there are a lot more negative instances than positive ones.

In addition, unlike SVMs, Naive Bayes classifiers perform well on datasets containing null values.

Disadvantages

The disadvantage of Naive Bayes is that it assumes everything in each feature’s distribution is independent, which may not be true if you have multivariate data.

However, if your data does fit into Naive Bayes’ assumption, then you’ll often see better performance with it VS Some other algorithms are out there like SVM or logistic regression.

How To Optimize Our Model or Predictions?

In order to create an optimized Naive Bayes Classifier, we need a way of figuring out how well our predictions are doing.

There are different fundamental metrics that we can measure to determine how well our algorithm is performing, precision, recall, and AUC.

Precision: Precision measures how many actual positive outcomes were correctly predicted in relation to all instances that have been labeled as positive.

For example, if we had a total of 1000 tweets classified, let’s say with 100 being positive examples and 900 being negative ones, we would expect precision of 90%.

Recall: Recall measures how many actual positive outcomes were correctly predicted in relation to all instances that have been labeled as such. Ideally, you’d want your classifier to have both high precision and high recall.

The same applies to recall, A good score would be an equal number of correct positive and negative predictions.

AUC or area under the ROC curve is also another important metric for evaluating machine learning models.

AUC or area under ROC curve measures sensitivity (True Positive Rate) against 1-specificity (False Positive Rate) to calculate overall model performance on unseen test data points.

We can use any of these metrics to select an optimal model that performs well with our dataset, while still having a certain level of generalization.

How to Know the Naïve Bayes classifier Model Is Good?

The Naïve Bayes classifier is a supervised machine learning algorithm that can be used for predictive classification problems.

It is based on Bayes’ theorem, which allows us to calculate conditional probabilities between two events.

When we want to make predictions about classes, we must assign prior probabilities, which are usually unknown and must be estimated using statistical methods.

Related Article: What is a Supervised Learning? – Detail Explained

How to validate Naïve Bayes Classifier?

A). One way of evaluating models is by testing them against samples (the ‘hold-out’ method).

For example, you could use only 10% of your entire dataset to test whether your model works well with random data.

This would help you understand how well your model generalizes basically whether or not it’s useful in new situations other than those in which it was trained.

B). Another approach is called cross-validation, You divide your entire dataset into multiple subsets: three, four, or five should be enough.

Then, you train your model several times on each subset and then measure its performance over these different training sets as an average measure of how well it will perform when deployed on future data.

This method helps improve our understanding of how sensitive our model is towards differences in parameters and preprocessing choices.

C). A third strategy, also known as k-fold cross-validation or bootstrapping, consists in splitting your data sample into k equal parts. We may train our model on one part only, leaving aside all others; repeat until all folds have been used.

When Can I Use the Naïve Bayes Algorithm?

Naïve Bayes Algorithm can be applied in text classification problems when we don’t have enough labeled data.

Also, Naïve Bayes Algorithm works best on sparse matrix data which creates thousands of vectors depending on words in a dictionary.

If we want to classify our word based on some knowledge base like sentiment analysis, spam detection, opinion mining, and so on, then it can be used.

In fact, it is being used in social media sites like Facebook for detecting whether there is abusive or offensive content.

The success rate of the Naive Bayes algorithm is more than other algorithms such as Logistic Regression or K-Nearest Neighbors (KNN).

Although Naive Bayes classifiers are not able to consider any kind of interactions between attributes its success rate compared with other algorithms makes it a favorite among most machine learning engineers.

It is widely available as an open-source implementation in various programming languages such as Java, Python, etc., through online sources like GitHub or Bitbucket.

It can also be easily integrated into any Java program by downloading third-party APIs from places like Google Code Libraries etc.

Are There Drawbacks to Using This Algorithm?

Like any algorithm, there are drawbacks and strengths associated with using a Naïve Bayes classifier from scratch.

The main drawback is that you will have to tweak your parameters for each individual application.

If you don’t do it properly, you might not get high accuracy when classifying text data.

The reason behind it is that Naïve Bayes works with conditional probabilities, which are highly dependent on a given dataset.

Even simple changes in your datasets can affect precision because of low training set sizes.

In order to avoid such situations, it would be best if you used an off-the-shelf machine learning library as a framework.

Doing so allows you to test how good its pre-set values are and then use those values (which means less tweaking), Another point worth mentioning here is parallelism.

The naive Bayes model cannot be parallelized efficiently due to sharing resources between iterations.

So, even though current CPUs support multi-core computing well, working on multiple CPU cores isn’t likely going to yield anything substantial.

Implementation of Naïve Bayes Classifier in R

Now we are going to implements the simple Naïve Bayes Classifier in R programming using the Hr Analytics Data to find the predictive output of different Attrition of HR

You can get the Hr Analytics Data from here – Download

Import the required libraries

library(e1071) library(dplyr)

Read the Hr Analytics Data for Modeling

hr = read.csv("HR Analytics.csv")

Convert the target Variable data into factor format

hr$Attrition = as.factor(hr$Attrition) set.seed(100)

Sample the data in train and test form for building and testing the model

hr_train = hr[sample(seq(1,nrow(hr)),(0.7*nrow(hr))),] hr_test = hr[sample(seq(1,nrow(hr)),(0.3*nrow(hr))),]

Finding the number of Attrition based on Mail and Femail

hr_train %>% filter(Attrition == 0, X == 'Female') %>% nrow() hr_train %>% filter(Attrition == 0, X == 'Male') %>% nrow() hr_train %>% filter(Attrition == 0) %>% nrow() unique(hr$JobRole)

Build the model and predict the output

model_nb = naiveBayes(Attrition~JobRole, data = hr_train) predicted = predict(model_nb, hr_test,type = 'raw') hr_test[12, "JobRole"]

How to Learn More About Naïve Bayes Models?

So you want to learn more about Naive Bayes models, huh? There are many resources out there on how they work and how they can be applied.

- Refer Different AI and machine learning books and White paper- (E.g. Artificial Intelligence: A Modern Approach)

- Read Books like: Machine Learning for Hackers or Machine Learning in Action or The Elements of Statistical Learning

- There are also a few MOOCs or Massive Open Online Courses, you can enroll in on how they work. This is an especially great resource if you want to learn more about them but don’t have time to read entire books on the subject.

- UC Berkeley Principles of Data Science (CS188) Machine Learning at Columbia University (CS229) Udacity.

- Learn More About Kaggle Competitions?: Kaggle is a website where data scientists from all over come together from every field imaginable to tackle big problems and get recognized for their achievements.

Conclusion

Text classification with a Naive Bayes classifier is not a difficult task, we can easily implement it from scratch.

However, it’s very important to choose appropriate features for our machine learning problem and understand how features work.

Next time you face a text classification problem, don’t forget about Naive Bayes! It’s fast, robust and out of memory-consuming compared to other algorithms such as SVM or others.

The theory behind naïve Bayes may look tricky but in fact, everything is logical when broken down into simple steps.

This post was intended to be introductory so that those who have never heard of Naive Bayes could give it a try.

DataScience Team is a group of Data Scientists working as IT professionals who add value to analayticslearn.com as an Author. This team is a group of good technical writers who writes on several types of data science tools and technology to build a more skillful community for learners.