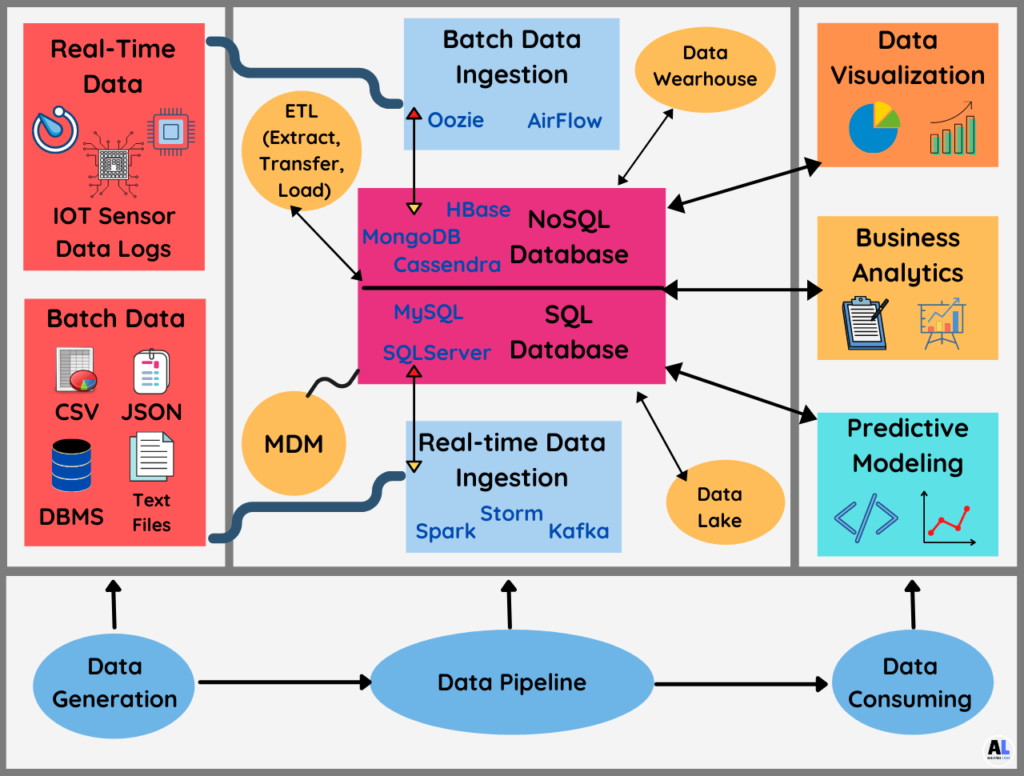

A data pipeline is the continuous flow of data from one end to the other, that is, from raw data to the final solution.

Another description would be: it is the process of transferring data from one point to another through intermediate steps and processing techniques.

Core Sections in Data Pipeline

1. Data Generation (Raw Data)

This is the step that comes before the data pipeline itself; in other words, it is the starting point of the data workflow.

This step can include several types of data sources or data generation components, which produce data either in batches or in real time.

2. Data Pipeline (Workflow)

This is the intermediate step where the data workflow and processing take place: it prepares the raw data and sends it to the last stage.

3. Data Consuming (Insights)

The end of the pipeline is the data consuming part, where the data is put to use. This includes data modeling, data visualization, and business analytics, all of which need well-prepared data.

Multiple Steps in data pipeline:

- Data Cleaning

- Data Processing

- Data Governance

- Data Enriching

- Data Monitoring
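
The steps above can be sketched as a chain of small functions. This is only a minimal illustration, not a production design: the record fields (`id`, `amount`), the `is_large` rule, and all function names are assumptions made up for the example, and data governance is left out because it is mostly about policy rather than code.

```python
def clean(records):
    """Data cleaning: drop records with a missing amount."""
    return [r for r in records if r.get("amount") is not None]

def process(records):
    """Data processing: convert the amount from string to float."""
    return [{**r, "amount": float(r["amount"])} for r in records]

def enrich(records):
    """Data enriching: add a derived field (hypothetical business rule)."""
    return [{**r, "is_large": r["amount"] >= 100.0} for r in records]

def run_pipeline(records, steps):
    """Apply each step in order; print row counts as very basic monitoring."""
    for step in steps:
        records = step(records)
        print(f"{step.__name__}: {len(records)} records")
    return records

# Hypothetical raw input: one record has no amount and will be dropped.
raw = [
    {"id": 1, "amount": "120.5"},
    {"id": 2, "amount": None},
    {"id": 3, "amount": "40"},
]
result = run_pipeline(raw, [clean, process, enrich])
```

Keeping each step as a separate function makes it easy to reorder, test, or replace a single step without touching the rest of the flow.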

Different types of Data Pipelines

- Realtime Data

- Batch Data

- Lambda or Hybrid Architecture
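
To make the hybrid idea more concrete, here is a rough sketch of how a Lambda-style design combines a batch view with a real-time view. The event shape and the function names (`batch_view`, `speed_view`, `serve`) are assumptions for illustration; real systems would use dedicated batch and streaming tools for each layer.

```python
from collections import Counter

def batch_view(historical_events):
    """Batch layer: recompute totals from all stored events."""
    return Counter(e["user"] for e in historical_events)

def speed_view(recent_events):
    """Speed layer: count only events not yet covered by the batch run."""
    return Counter(e["user"] for e in recent_events)

def serve(batch, speed):
    """Serving layer: merge both views to answer a query."""
    return batch + speed

# Hypothetical events: 'historical' is already stored, 'recent' just arrived.
historical = [{"user": "a"}, {"user": "b"}, {"user": "a"}]
recent = [{"user": "a"}, {"user": "c"}]
merged = serve(batch_view(historical), speed_view(recent))
```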

Multiple components in Data Pipeline

1. Batch files data sources

An enormous number of data sources are available for batch data generation, and the stored data can be in structured or unstructured form.

A batch file can be a CSV, JSON, text, SQL, or NoSQL file, or another multimedia file. These files are created continuously, batch by batch, and are ingested into the data pipeline using batch processing tools.
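
As a small sketch of batch ingestion, the example below reads a CSV batch and a JSON batch into one list of records using only the Python standard library. The sample data and the function names are made up for illustration, and in-memory strings stand in for real files.

```python
import csv
import io
import json

# Hypothetical batch contents; in practice these would be files on disk.
csv_batch = "id,city\n1,Pune\n2,Delhi\n"
json_batch = '[{"id": 3, "city": "Mumbai"}]'

def ingest_csv(text):
    """Parse CSV text into a list of dicts (all values stay strings)."""
    return list(csv.DictReader(io.StringIO(text)))

def ingest_json(text):
    """Parse a JSON array into a list of dicts (types are preserved)."""
    return json.loads(text)

records = ingest_csv(csv_batch) + ingest_json(json_batch)
```

Note that the CSV rows carry `id` as a string while the JSON row carries it as a number, which is one reason a cleaning step usually follows ingestion.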

2. Real-time data sources

A huge number of sensors, digital devices, robots, and software systems can generate real-time data continuously, stamped with a time (second, minute, hour). This data is mostly unstructured and is ingested into the data workflow using streaming tools.
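
A real-time source can be imitated with a Python generator that yields one timestamped reading at a time. The sketch below is an assumption-laden toy: `sensor_stream` and `rolling_average` are hypothetical names, and the readings are fixed values rather than data from a real device or streaming tool.

```python
from collections import deque
from datetime import datetime, timedelta

def sensor_stream(values, start, step_seconds=1):
    """Yield one timestamped reading at a time, like a device would."""
    for i, value in enumerate(values):
        yield {"ts": start + timedelta(seconds=i * step_seconds), "value": value}

def rolling_average(stream, window=3):
    """Consume events as they arrive and emit a moving average."""
    recent = deque(maxlen=window)  # keeps only the last `window` values
    for event in stream:
        recent.append(event["value"])
        yield event["ts"], sum(recent) / len(recent)

start = datetime(2024, 1, 1, 0, 0, 0)
averages = list(rolling_average(sensor_stream([10, 20, 30, 40], start)))
```

The consumer never needs the full dataset in memory; it only keeps the last few readings, which is the main difference from batch processing.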

3. Dataflow (ETL)

It is a well-known and widely used process in big data analytics that consists of the extraction, transformation, and loading of data (extract, transform, load).

ETL is a subset of the data pipeline that is most often used to extract and process historical data for a data warehouse.
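
The three ETL stages can be sketched with the standard library alone. In this illustration an in-memory SQLite database stands in for the data warehouse, and the `orders` table, its columns, and the sample CSV are all assumptions made up for the example.

```python
import csv
import io
import sqlite3

# Hypothetical raw export: one amount has stray whitespace, one is missing.
raw_csv = "order_id,amount\n1, 19.99\n2,5.00\n3,\n"

def extract(text):
    """Extract: read raw rows from the source."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: trim whitespace, cast types, skip rows with no amount."""
    cleaned = []
    for row in rows:
        amount = row["amount"].strip()
        if amount:
            cleaned.append((int(row["order_id"]), float(amount)))
    return cleaned

def load(rows, conn):
    """Load: write the cleaned rows into the target table."""
    conn.execute("CREATE TABLE IF NOT EXISTS orders (order_id INTEGER, amount REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")  # stand-in for a real warehouse
load(transform(extract(raw_csv)), conn)
total = conn.execute("SELECT SUM(amount) FROM orders").fetchone()[0]
```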

4. Master Data Management (MDM)

Master data management (MDM) helps build a single master reference source for all crucial business data, which leads to less redundancy and fewer errors in business processes.
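
One simple way to picture a master reference is merging duplicate records from different systems into a single entry per customer. The sketch below assumes, purely for illustration, that an email address identifies a customer and that the first non-empty value seen for a field should win; real MDM tools use far richer matching and survivorship rules.

```python
def normalize_email(email):
    """Use a trimmed, lowercased email as the matching key."""
    return email.strip().lower()

def build_master(records):
    """Merge records sharing a key; the first non-empty value per field wins."""
    master = {}
    for rec in records:
        key = normalize_email(rec["email"])
        merged = master.setdefault(key, {"email": key})
        for field, value in rec.items():
            if field != "email" and value and not merged.get(field):
                merged[field] = value
    return master

# Hypothetical records for the same person held in two systems.
crm = [{"email": "Ana@Example.com", "name": "Ana", "phone": ""}]
billing = [{"email": "ana@example.com ", "name": "", "phone": "555-0100"}]
master = build_master(crm + billing)
```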

5. Data Lake

It is a data container that holds a large amount of structured, semi-structured, and unstructured data without any imposed label or format.

It stores batch data files or real-time data, mostly in raw, unprocessed form, which you can access as needed.

Google BigQuery is an example of a tool that can work on a data lake, processing raw data to get the expected output.

6. Data Warehouse

It is a large container of transactional and historical data which is mostly in a structured form that you can easily access for analysis.

7. Analytics (Modeling)

It is one of the final parts of the data pipeline, where we can develop predictive models on well-cleaned and processed information to predict future outcomes.
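
As a toy example of predictive modeling on prepared data, the sketch below fits a straight line with ordinary least squares and uses it to forecast the next value. The monthly sales figures are invented, and a real project would normally use a modeling library and a properly validated model rather than this hand-written fit.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical cleaned data: sales for months 1 to 4.
months = [1, 2, 3, 4]
sales = [10.0, 12.0, 14.0, 16.0]
slope, intercept = fit_line(months, sales)
forecast = slope * 5 + intercept  # predicted sales for month 5
```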

8. Visualization

Data visualization is the final step, where you can see statistically how the data performs or how past transactions have moved.

It is a crucial and useful process for getting clear insight from data and increasing the chances of making the right business decisions.

Setup Options for Data Pipelines

A data pipeline can be set up on-premises or on cloud services; each approach has different advantages and disadvantages.

1. Cloud data pipeline:

This approach is well known and growing rapidly in the industry because advanced cloud systems and software suites are available without any large infrastructure or physical hardware of your own.

Similarly, you can use as much storage as you want on the cloud platform, paying the provider only for the storage space and execution speed you use.

In addition, you can integrate several tools and technologies into the cloud pipeline based on your project. Microsoft Azure is one example of a cloud platform that offers data pipeline services.

2. On-premises data pipeline:

To set up an on-premises data pipeline, you need to purchase and deploy multiple software and hardware components in your own private data center.

An on-premises data pipeline gives you full control and many customization options to make the dataflow efficient, but it costs more.

Similarly, you need to manage and secure your data center yourself, including data backup and recovery, software upgrades and updates, and hardware management.

Conclusion

To conclude, a data pipeline is the workflow of data from the raw stage to completely processed data or the expected analyzed results.

It is the combination of several data sources and data processing tools or technologies to make business processes efficient.

In the end, it helps data engineers work efficiently and makes the data execution process more understandable to non-technical people and senior management.

Meet Nitin, a seasoned professional in the field of data engineering. With a Post Graduation in Data Science and Analytics, Nitin is a key contributor to the healthcare sector, specializing in data analysis, machine learning, AI, blockchain, and various data-related tools and technologies. As the Co-founder and editor of analyticslearn.com, Nitin brings a wealth of knowledge and experience to the realm of analytics. Join us in exploring the exciting intersection of healthcare and data science with Nitin as your guide.