In this guide, we will look at autoencoders, how they work, and the use cases they can be applied to in neural networks.

Autoencoders are important tools in the field of machine learning. They provide a way to learn new, compact representations of data that can be fed into other analysis techniques or used to reconstruct the original data from its encoded form.

We’ll cover how autoencoders work and why they’re useful in this introductory guide to neural networks, as well as how you can use them to make sense of your own data.

An autoencoder is an artificial neural network designed to learn a compact representation of data, compressing it into fewer dimensions (or bits).

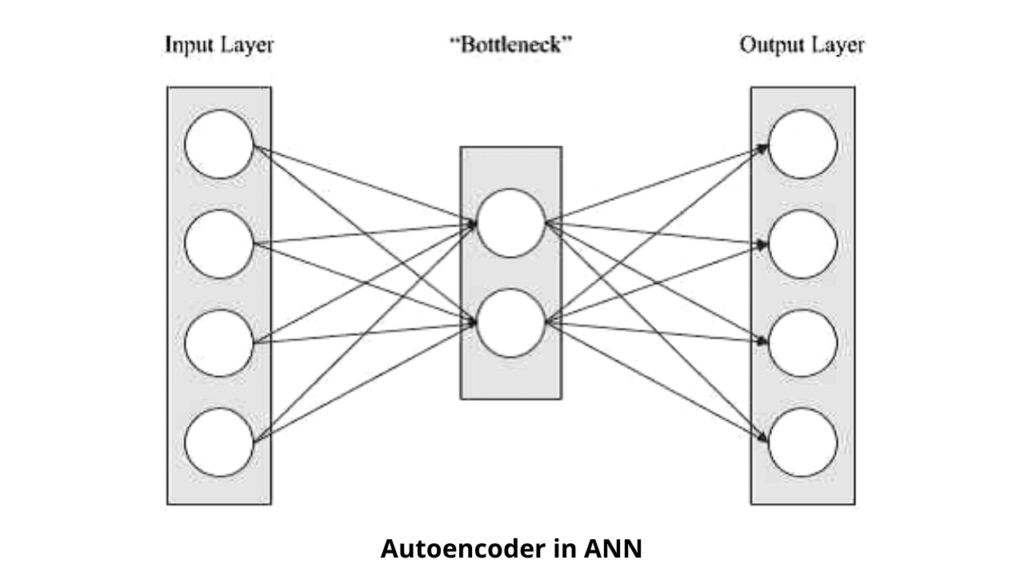

An autoencoder consists of an encoder and a decoder.

The encoder learns how to represent the input data in fewer bits and the decoder learns how to reconstruct the original data from the encoded version provided by the encoder.
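To make the two halves concrete, here is a minimal NumPy sketch. It assumes a tiny fully connected design (8 inputs, a 3-number code, a tanh encoder and a linear decoder) with untrained random weights; the names `encode`, `decode`, `W_enc`, and `W_dec` are hypothetical, chosen only for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes: 8 input features squeezed into a 3-number code.
input_dim, code_dim = 8, 3

# Untrained, randomly initialised weights; training would adjust these.
W_enc = rng.normal(scale=0.1, size=(input_dim, code_dim))
b_enc = np.zeros(code_dim)
W_dec = rng.normal(scale=0.1, size=(code_dim, input_dim))
b_dec = np.zeros(input_dim)

def encode(x):
    """Encoder (hypothetical helper): map an input vector to a smaller code."""
    return np.tanh(x @ W_enc + b_enc)

def decode(code):
    """Decoder (hypothetical helper): map a code back to the input space."""
    return code @ W_dec + b_dec

x = rng.normal(size=input_dim)
code = encode(x)
x_hat = decode(code)

# Reconstruction error: the quantity training would try to minimise.
mse = np.mean((x - x_hat) ** 2)
print(code.shape, x_hat.shape)  # (3,) (8,)
```

Only the encoder's output (3 numbers) needs to be stored or transmitted; the decoder rebuilds an 8-number approximation from it.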

What Are Autoencoders?

Autoencoders are a type of artificial neural network used for unsupervised learning. More precisely, they are self-supervised: no labels are needed, because the training target is the input itself, so the output layer has the same size as the input layer.

In other words, they learn to encode and compress information rather than to interpret or classify it. The encoder reduces an input to an encoded version, and the decoder then tries to reconstruct the original input from that encoded version alone.

They are normally trained on unlabeled data. Labels (for example, one-hot encoded classes) are not required, although some variants, such as conditional autoencoders, take them as an additional input.

Their applications span feature extraction, dimensionality reduction, and manifold learning (learning low-dimensional embeddings of the data); overcomplete variants, such as sparse autoencoders, can instead map inputs to higher-dimensional representations.

What are Neural Networks?

A neural network is a set of artificial neurons that can be trained to approximate a wide range of input-output relationships.

A neural network consists of a large number of artificial neurons, connected with each other.

Each neuron takes the outputs of the previous layer as its inputs, computes a weighted sum of them plus a bias term, and passes the result through an activation function to produce its own output value.

The outputs of one layer then become the inputs of the next layer. Stacking many such layers gives a deep neural network, which is where the term Deep Learning comes from.
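A minimal NumPy sketch of that computation, assuming ReLU as the activation and hand-picked illustrative weights (not values from any trained network):

```python
import numpy as np

def relu(z):
    return np.maximum(0.0, z)

# One neuron: weighted sum of the previous layer's outputs, plus a bias,
# passed through an activation function.
prev_outputs = np.array([1.0, 2.0])
weights = np.array([0.5, -0.25])
bias = 0.1
neuron_out = relu(weights @ prev_outputs + bias)  # relu(0.5 - 0.5 + 0.1) = 0.1

# A layer is many neurons at once: a weight matrix instead of a vector.
W1 = np.array([[0.5, -0.25],
               [1.0, 1.0]])  # 2 neurons, each with 2 input weights
b1 = np.array([0.1, -4.0])
layer1 = relu(W1 @ prev_outputs + b1)  # [0.1, 0.0]; second neuron: 1 + 2 - 4 = -1 -> 0

# The next layer takes layer1's outputs as its inputs.
W2 = np.array([[2.0, 3.0]])
b2 = np.array([0.0])
layer2 = relu(W2 @ layer1 + b2)  # [0.2]
```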

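Classic activation functions include the sigmoid, which squashes its input into the range (0, 1), and tanh, which squashes it into (-1, 1). Both are nonlinear; they behave roughly linearly only for inputs near zero.

ReLU (rectified linear unit), which outputs max(0, z), is now the default choice in many deep networks because it suffers less from vanishing gradients than sigmoid or tanh. Sigmoid and tanh are still widely used, for example in output layers whose values must lie in a fixed range.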
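The three activation functions mentioned above can be written in a few lines of NumPy; this is a sketch for comparing their output ranges, not a library implementation:

```python
import numpy as np

def sigmoid(z):
    # Squashes any real number into (0, 1).
    return 1.0 / (1.0 + np.exp(-z))

def tanh(z):
    # Squashes into (-1, 1) and is centred at zero.
    return np.tanh(z)

def relu(z):
    # Zero for negative inputs, identity for positive inputs.
    return np.maximum(0.0, z)

z = np.array([-2.0, 0.0, 2.0])
for name, f in [("sigmoid", sigmoid), ("tanh", tanh), ("relu", relu)]:
    print(name, np.round(f(z), 3))
```

Because sigmoid and tanh flatten out for large |z|, their gradients shrink there; ReLU's gradient stays 1 for every positive input.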


Why Do We Need Autoencoders in Neural Networks?

Autoencoder neural networks efficiently compress and encode data, then reconstruct it from the reduced encoded representation into a representation that is as close to the original input as possible.

They are used in image compression, handwriting recognition, and as components of systems such as self-driving cars, to name a few applications.

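They are sometimes grouped with other unsupervised neural networks such as Restricted Boltzmann Machines (RBMs), but the two are different models; historically, stacked RBMs were used to pretrain the layers of deep autoencoders.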

Autoencoders are unsupervised neural networks commonly used for dimensionality reduction.

A practical example is an image autoencoder, which encodes an image into a vector and then tries to reconstruct the original image from that encoded representation.

An autoencoder is often used as an intermediate step in a larger pipeline, for example to learn features that a downstream model then uses.

It is also useful in data science and machine learning for a variety of problems, including anomaly detection, feature extraction, and dimensionality reduction.
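For anomaly detection, a common approach is to train the autoencoder on normal data only and flag inputs it reconstructs poorly. The sketch below assumes you already have the reconstructions `X_hat` from some trained model (here replaced by hand-made toy values); the helper names and the threshold of 1.0 are hypothetical.

```python
import numpy as np

def reconstruction_errors(X, X_hat):
    """Hypothetical helper: per-sample mean squared error."""
    return np.mean((X - X_hat) ** 2, axis=1)

def flag_anomalies(X, X_hat, threshold):
    """Hypothetical helper: mark samples whose error exceeds `threshold`."""
    return reconstruction_errors(X, X_hat) > threshold

# Toy stand-in for a trained autoencoder's output: the first two rows are
# reconstructed well, the third is not.
X = np.array([[1.0, 2.0], [3.0, 4.0], [10.0, -10.0]])
X_hat = np.array([[1.1, 1.9], [2.9, 4.1], [0.0, 0.0]])

errors = reconstruction_errors(X, X_hat)
# In practice the threshold is often set from a percentile of the errors on
# normal validation data; here a fixed value is used for illustration.
flags = flag_anomalies(X, X_hat, threshold=1.0)
print(errors, flags)  # only the third sample is flagged
```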

How do Autoencoders Work?

Autoencoders can be used for a variety of purposes such as dimensionality reduction, probabilistic modeling or simply feature extraction.

Autoencoder models are typically made of three main parts.

The first part is the encoder. It starts at the input layer, which captures your input variable(s), and uses one or more hidden layers to compress them.

The second part is the bottleneck (or code) layer. This is the narrowest layer in the network and holds the compressed, encoded representation of the input.

The third part is the decoder, which takes the bottleneck representation and tries to reconstruct the original input from it. In convolutional autoencoders for images, the decoder often uses deconvolutional (transposed convolution) layers to upsample the code back to the input’s size.

All three parts are trained together to minimize the reconstruction error between the original input and the output produced by the decoder.
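To illustrate what a deconvolutional (transposed convolution) layer does in a decoder, here is a naive 1-D version in NumPy. It assumes no padding and a fixed kernel, where a real layer would learn the kernel; `conv_transpose_1d` is a hypothetical helper, not a library function. Each input value adds a scaled copy of the kernel to the output, so a short code is upsampled into a longer signal:

```python
import numpy as np

def conv_transpose_1d(x, kernel, stride):
    """Naive 1-D transposed convolution (hypothetical helper, no padding).

    Each input value "stamps" a scaled copy of the kernel onto the output,
    with stamps spaced `stride` apart; overlapping stamps are summed.
    Output length is (len(x) - 1) * stride + len(kernel).
    """
    n, k = len(x), len(kernel)
    out = np.zeros((n - 1) * stride + k)
    for i, value in enumerate(x):
        out[i * stride : i * stride + k] += value * kernel
    return out

code = np.array([1.0, 2.0, 3.0])  # a short, "compressed" signal
kernel = np.array([1.0, 1.0])     # learned in a real decoder; fixed here
print(conv_transpose_1d(code, kernel, stride=2))  # [1. 1. 2. 2. 3. 3.]
```

With stride 2, every code value is spread over two output positions, doubling the length; deep-learning frameworks provide the same operation (with padding and channels) as a built-in layer.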

Autoencoders are usually trained with gradient-based optimizers such as stochastic gradient descent (SGD), SGD with momentum, or Adam. Historically, deep autoencoders were also pretrained layer by layer with Restricted Boltzmann Machines (RBMs), which are a separate model rather than a learning algorithm.

We won’t go into detail on exactly how these algorithms work here.
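Even without the details, a small example shows the idea. This sketch trains a purely linear autoencoder (no activation functions, an assumption made to keep the gradients short) with full-batch gradient descent plus momentum on toy data, minimizing the mean squared reconstruction error. The learning rate 0.01, momentum 0.9, and 500 steps are illustrative choices, not tuned values.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy data: 4 features that really carry only 2 numbers of information
# (columns are z1, z2, z1, z2), so a 2-unit bottleneck can reconstruct them.
Z = rng.normal(size=(200, 2))
X = np.hstack([Z, Z])

d, k = X.shape[1], 2
W_enc = rng.normal(scale=0.1, size=(d, k))  # encoder weights
W_dec = rng.normal(scale=0.1, size=(k, d))  # decoder weights
v_enc = np.zeros_like(W_enc)                # momentum "velocity" buffers
v_dec = np.zeros_like(W_dec)
lr, beta = 0.01, 0.9

losses = []
for step in range(500):
    code = X @ W_enc      # encode
    X_hat = code @ W_dec  # decode
    diff = X_hat - X
    losses.append(np.mean(np.sum(diff ** 2, axis=1)))

    # Gradients of the mean squared reconstruction error.
    G = 2.0 * diff / X.shape[0]
    grad_dec = code.T @ G
    grad_enc = X.T @ (G @ W_dec.T)

    # Momentum update: accumulate a decaying sum of past gradients.
    v_dec = beta * v_dec - lr * grad_dec
    v_enc = beta * v_enc - lr * grad_enc
    W_dec += v_dec
    W_enc += v_enc

print(f"loss: {losses[0]:.3f} -> {losses[-1]:.6f}")
```

Replacing the two velocity updates with `W -= lr * grad` gives plain gradient descent; optimizers such as Adam change only this update step.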

Why Are Autoencoders Useful?

Autoencoders are a type of artificial neural network designed to learn mappings from inputs $x \in \mathbb{R}^d$ back to outputs in $\mathbb{R}^d$. In the common denoising variant, some noise is added to each input, and the network learns to recover the clean $x$.
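In code, the only thing that changes for a denoising autoencoder is the training pair: the input is corrupted, and the target stays clean. A minimal NumPy sketch, assuming additive Gaussian noise (the helper name `make_denoising_pairs` and the noise level 0.1 are hypothetical):

```python
import numpy as np

def make_denoising_pairs(X, noise_std, rng):
    """Hypothetical helper: return (noisy inputs, clean targets).

    Additive Gaussian noise is one common corruption; masking
    (zeroing random entries) is another.
    """
    X_noisy = X + rng.normal(scale=noise_std, size=X.shape)
    return X_noisy, X

rng = np.random.default_rng(42)
X = rng.uniform(size=(5, 4))
X_in, X_target = make_denoising_pairs(X, noise_std=0.1, rng=rng)
```

Any autoencoder training loop can then be fit with `X_in` as the input and `X_target` as the target.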

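They are useful because their reconstruction error tells us how well the $d$ dimensions of $x$ can be summarized by a smaller code. If the input can be reconstructed accurately through a bottleneck with fewer than $d$ units, some of the input dimensions must be redundant.

That is, they can help us detect redundancy (or the lack of it) in our data or features.

Oftentimes it’s easy to detect when a single dimension is irrelevant (for example, a feature that is nearly constant), but much harder to tell when two or more dimensions carry overlapping information.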
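One quick way to see redundancy detection in action relies on a simplifying assumption: a linear autoencoder with a k-unit bottleneck trained on squared error learns the same subspace as principal component analysis (PCA), so PCA’s reconstruction error is a deterministic stand-in for it. The sketch below (the helper name is hypothetical) builds data whose third column is the sum of the first two and measures the error for bottleneck sizes 1, 2, and 3:

```python
import numpy as np

def linear_reconstruction_error(X, k):
    """Hypothetical helper: MSE after projecting centred X onto its top-k
    principal directions (a stand-in for a linear autoencoder with k units)."""
    Xc = X - X.mean(axis=0)
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    P = Vt[:k].T @ Vt[:k]  # projector onto the top-k directions
    return np.mean((Xc - Xc @ P) ** 2)

rng = np.random.default_rng(0)
x1, x2 = rng.normal(size=(2, 500))
X = np.column_stack([x1, x2, x1 + x2])  # third column is redundant

for k in (1, 2, 3):
    print(k, round(linear_reconstruction_error(X, k), 6))
```

The error drops to essentially zero at k = 2, revealing that the three columns carry only two dimensions of information. A nonlinear autoencoder can expose nonlinear redundancy that PCA would miss.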

Removing irrelevant and redundant features often helps neural networks train faster and generalize better, so autoencoders are an important tool to have at your disposal.

Can I Train my Own Autoencoder?

First, let’s recap what an autoencoder is. Basically, it’s a model that compresses information in one step, for efficient storage or transmission, and decompresses it later. The compression is usually lossy, so the reconstruction is close to the original but not identical.

We don’t need much more than the input, encoding, and output blocks to build our own model with an autoencoder architecture, and the learned encoding can later serve as input features for a classifier. Let’s go over each part.

To train our model, we will use scikit-learn and matplotlib; some familiarity with these packages will be helpful but not required.

When working with scikit-learn, we first need to import all the packages we plan to use for training, modeling, prediction, and visualization.

No separate initialization step is needed after importing them: a model is configured when you create an estimator object and pass its settings to the constructor.

For example, if I wanted to utilize NumPy and pandas within my code I would include:

```python
import numpy as np
import pandas as pd
```
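scikit-learn has no dedicated autoencoder class, but one workable approach is to train its `MLPRegressor` to predict its own input. The sketch below assumes the built-in digits dataset, a (32, 8, 32) hidden-layer layout, and tanh activations; these are illustrative choices, and the helper `encode` is hypothetical. It reads the 8-number codes from the bottleneck by replaying the first two layers with the fitted `coefs_` and `intercepts_` arrays.

```python
import numpy as np
from sklearn.datasets import load_digits
from sklearn.neural_network import MLPRegressor

# 8x8 digit images flattened to 64 features, scaled to [0, 1].
X = load_digits().data / 16.0

# An MLP trained to predict its own input acts as an autoencoder.
# The hidden sizes (32, 8, 32) are an arbitrary choice; the 8-unit
# middle layer is the bottleneck.
autoencoder = MLPRegressor(
    hidden_layer_sizes=(32, 8, 32),
    activation="tanh",
    max_iter=300,
    random_state=0,
)
autoencoder.fit(X, X)  # target == input: no labels needed
X_hat = autoencoder.predict(X)

def encode(model, X, n_layers=2):
    """Hypothetical helper: run the first `n_layers` layers by hand to get
    bottleneck codes. Assumes the model was fitted with activation="tanh"."""
    h = X
    for W, b in zip(model.coefs_[:n_layers], model.intercepts_[:n_layers]):
        h = np.tanh(h @ W + b)
    return h

codes = encode(autoencoder, X)
print(codes.shape)                # (1797, 8)
print(np.mean((X - X_hat) ** 2))  # reconstruction MSE
```

With `max_iter=300`, scikit-learn may emit a `ConvergenceWarning`; raising `max_iter` trades training time for a lower reconstruction error. The `codes` array can be plotted with matplotlib or used as input features for another model.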

If you plan to feed the learned features into a classification model, I would recommend first reading a bit about ensembles, random forests, support vector machines, decision trees, and boosting algorithms; familiarity with them will help you understand what goes into building various classification models.

On top of that, familiarize yourself with the scikit-learn API if you don’t already know it well; many of these (and other) classification algorithms are available in it.

Benefits of Autoencoders in Neural Networks

Autoencoders are used in many applications like computer vision and natural language processing.

An autoencoder neural network is a type of artificial neural network that is used for unsupervised learning.

It learns to encode data into a compressed representation and then decode it back into a format that’s close to its original state.

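The structure of an autoencoder looks very similar to a regular feed-forward neural network, but with two important differences.

First, the output layer has the same size as the input layer, and second, there is usually a narrow hidden bottleneck layer in the middle that forces the network to compress its input. In a standard feed-forward network, input $x$ passes through layers with weights $W^{(1)}, W^{(2)}, \dots, W^{(L)}$, each followed by an activation function $f$, to produce an output $y = f\big(W^{(L)} \cdots f\big(W^{(2)} f\big(W^{(1)} x\big)\big)\big)$ (bias terms omitted), which is compared with a separate target label during training.

In an autoencoder, the training target is the input $x$ itself: the network’s output $\hat{x}$ is compared with $x$ rather than with a label.

The weights of the hidden layers are ordinary real-valued parameters, learned by gradient descent to make this reconstruction error as small as possible.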
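Put as a formula, training an autoencoder usually means minimizing the average squared reconstruction error over the $n$ training examples. Here $\operatorname{enc}_\theta$ and $\operatorname{dec}_\phi$ (our notation, not from a specific library) stand for the encoder and decoder with parameters $\theta$ and $\phi$; squared error is one common choice of loss, and cross-entropy is often used instead for inputs scaled to [0, 1].

$$
\min_{\theta,\,\phi}\; \frac{1}{n} \sum_{i=1}^{n} \big\lVert x_i - \hat{x}_i \big\rVert_2^2,
\qquad \hat{x}_i = \operatorname{dec}_\phi\big(\operatorname{enc}_\theta(x_i)\big)
$$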

Conclusion

Autoencoders (AE) are neural networks that can be used as a feature extraction component of a Deep Learning model.

An AE learns how to efficiently compress and encode data and then, using its internal latent representation (encoded vector), learns how to reconstruct the original input vector as closely as possible.

Meet Nitin, a seasoned professional in the field of data engineering. With a Post Graduation in Data Science and Analytics, Nitin is a key contributor to the healthcare sector, specializing in data analysis, machine learning, AI, blockchain, and various data-related tools and technologies. As the Co-founder and editor of analyticslearn.com, Nitin brings a wealth of knowledge and experience to the realm of analytics. Join us in exploring the exciting intersection of healthcare and data science with Nitin as your guide.