In this blog post, we are going to explore singular value decomposition (SVD), a great technique for analyzing data, and how it is applied in a variety of scenarios.

We will also discuss what the SVD of a matrix actually means and how to compute it.

Singular value decomposition (SVD) is a matrix operation that, in many applications, is just as important as computing the determinant.

SVD breaks a large data matrix into simpler pieces. Keeping only the most important pieces lets you represent the data with much smaller matrices, which is helpful for data processing and analysis.

Singular value decomposition is one of the most important factorizations for understanding and applying linear algebra.

What is Singular Value Decomposition?

SVD is a factorization of a matrix into three matrices: a unitary matrix \(U\), whose columns (the left singular vectors) are orthonormal;

a diagonal matrix \(S\), whose diagonal entries are the singular values, which are nonnegative and conventionally sorted from largest to smallest; and

a second unitary matrix \(V\), whose columns (the right singular vectors) are also orthonormal.

Multiplying the three factors back together reproduces the original matrix exactly, and multiplying truncated versions of them produces simpler approximations of it.

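Example 1: SVD gives a way to invert a matrix, or to get the closest thing to an inverse when none exists. If \(A = U S V^T\), the pseudoinverse is \(A^{+} = V S^{+} U^T\), where \(S^{+}\) is obtained by transposing \(S\) and replacing each nonzero singular value by its reciprocal. Singular values close to zero signal that the matrix is nearly singular; in practice they are set to zero rather than inverted, because their reciprocals would amplify noise.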
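To make Example 1 concrete, here is a minimal NumPy sketch of the pseudoinverse \(A^{+} = V S^{+} U^T\). The function name `pinv_via_svd` and the relative cutoff `rtol=1e-10` are illustrative choices of ours, not a standard API; NumPy already ships `np.linalg.pinv` for real use.

```python
import numpy as np

def pinv_via_svd(A, rtol=1e-10):
    """Pseudoinverse A^+ = V S^+ U^T, built from the SVD (illustrative helper)."""
    U, s, Vt = np.linalg.svd(A, full_matrices=False)
    # Treat singular values below rtol * (largest one) as exactly zero,
    # because inverting them would amplify rounding noise.
    keep = s > rtol * s[0]
    s_inv = np.zeros_like(s)
    s_inv[keep] = 1.0 / s[keep]
    return Vt.T @ np.diag(s_inv) @ U.T

# Invertible matrix: the pseudoinverse coincides with the ordinary inverse.
A = np.array([[4.0, 1.0],
              [2.0, 3.0]])
print(np.allclose(pinv_via_svd(A), np.linalg.inv(A)))  # True

# Singular matrix (second row = 2 * first row): no inverse exists,
# but the pseudoinverse does. For this rank-one matrix it equals B^T / 25.
B = np.array([[1.0, 2.0],
              [2.0, 4.0]])
B_pinv = pinv_via_svd(B)
print(np.allclose(B_pinv, B.T / 25.0))  # True
```

The cutoff is the important design choice: without it, the near-zero singular value of `B` would be inverted into a huge number.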

Example 2: The dot product of an input vector \(x\) with the \(i\)-th right singular vector \(v_i\) tells you how closely \(x\) aligns with that axis. Multiplying by \(A\) then amounts to scaling each of these alignments by the corresponding singular value \(s_i\) and combining the left singular vectors \(u_i\) with those weights: \(A x = \sum_i s_i (v_i^T x)\, u_i\).
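The three steps of Example 2 can be traced in a short NumPy sketch; the random matrix and vector are arbitrary stand-ins for real data.

```python
import numpy as np

rng = np.random.default_rng(0)
A = rng.standard_normal((4, 3))   # arbitrary example matrix
x = rng.standard_normal(3)        # arbitrary input vector

U, s, Vt = np.linalg.svd(A, full_matrices=False)

# Step 1: dot products v_i . x measure how well x aligns with each
# right singular vector (the rows of Vt).
alignments = Vt @ x

# Step 2: scale each alignment by its singular value s_i.
scaled = s * alignments

# Step 3: combine the left singular vectors u_i with those weights.
Ax_from_svd = U @ scaled

print(np.allclose(Ax_from_svd, A @ x))  # True
```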

The three factors \(U\), \(S\), and \(V\) separate the matrix \(A\) into three pieces: two rotations (possibly combined with reflections) and a scaling along orthogonal axes.

This allows you to understand the structure of the data much better.

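Formally, the SVD of an \(m \times n\) matrix \(A\) has the form \(A = U S V^T\), where \(U\) (\(m \times m\)) and \(V\) (\(n \times n\)) are orthogonal matrices (unitary, with \(V^T\) replaced by the conjugate transpose \(V^{*}\), when \(A\) is complex) and \(S\) is an \(m \times n\) diagonal matrix with entries \(s_1 \ge s_2 \ge \dots \ge 0\).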
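As a quick sanity check of \(A = U S V^T\), here is a NumPy sketch on a small \(2 \times 3\) matrix whose singular values are known to be \(\sqrt{12}\) and \(\sqrt{10}\) (the eigenvalues of \(A A^T\) are 12 and 10). Note that `np.linalg.svd` returns the singular values as a 1-D array `s` and returns \(V^T\) rather than \(V\), so we rebuild the rectangular \(S\) ourselves. The last lines also rebuild \(A\) as a sum of rank-one pieces \(s_i u_i v_i^T\).

```python
import numpy as np

# A small 2 x 3 example matrix.
A = np.array([[3.0, 1.0, 1.0],
              [-1.0, 3.0, 1.0]])

# full_matrices=True returns a square U (2 x 2) and V^T (3 x 3);
# s is a 1-D array of singular values in decreasing order.
U, s, Vt = np.linalg.svd(A, full_matrices=True)

# Embed s in a 2 x 3 matrix S that is zero off the diagonal.
S = np.zeros(A.shape)
S[:len(s), :len(s)] = np.diag(s)

print(s)                              # approximately [sqrt(12), sqrt(10)] = [3.464, 3.162]
print(np.allclose(A, U @ S @ Vt))     # True: A = U S V^T

# The same matrix as a sum of rank-one pieces s_i * u_i v_i^T.
A_sum = sum(s[i] * np.outer(U[:, i], Vt[i]) for i in range(len(s)))
print(np.allclose(A, A_sum))          # True
```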

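The notation can get a bit confusing, so it helps to spell out the entries of \(S\): \(S_{ii} = s_i\) and \(S_{ij} = 0\) for \(i \neq j\). Writing \(u_i\) and \(v_i\) for the \(i\)-th columns of \(U\) and \(V\), the factorization is equivalently a sum of rank-one matrices, \(A = \sum_{i=1}^{r} s_i u_i v_i^T\), where \(r\) is the rank of \(A\).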

Singular Value Decomposition And Matrix Factorization

The singular value decomposition of a matrix factors it into three matrices, which hold the left singular vectors, the singular values, and the right singular vectors.

This decomposition can be used to study both how linear transformations act on data points in high dimensions and how they can be approximated by simpler, lower-rank transformations.

In addition to its theoretical uses, it has some very important applications in data science.

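For example, keeping only the largest singular values and their vectors (a truncated SVD) gives the best low-rank approximation of a matrix in the least-squares sense (the Eckart-Young theorem), and SVD-based initializations are commonly used to start non-negative matrix factorization algorithms.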
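The low-rank approximation claim can be checked numerically. In this NumPy sketch, `truncated_svd` is a hypothetical helper name, and the random \(6 \times 5\) matrix stands in for real data. The Frobenius error of the rank-\(k\) truncation should equal \(\sqrt{\sum_{i>k} s_i^2}\).

```python
import numpy as np

def truncated_svd(A, k):
    """Rank-k approximation of A from its k largest singular triplets."""
    U, s, Vt = np.linalg.svd(A, full_matrices=False)
    return U[:, :k] @ np.diag(s[:k]) @ Vt[:k, :]

rng = np.random.default_rng(42)
A = rng.standard_normal((6, 5))
s = np.linalg.svd(A, compute_uv=False)   # singular values only

k = 2
A_k = truncated_svd(A, k)

# Eckart-Young: the Frobenius error of the best rank-k approximation
# equals the root-sum-square of the discarded singular values.
error = np.linalg.norm(A - A_k, "fro")
predicted = np.sqrt(np.sum(s[k:] ** 2))
print(error, predicted)   # the two numbers agree up to rounding
```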

In short, SVD is a factorization of a matrix into three matrices that has useful algebraic properties and conveys important geometric and theoretical insights about linear transformations.

Beyond the theory, SVD is a practical tool for finding approximations to matrices.

The decomposition itself was discovered independently by Eugenio Beltrami (1873) and Camille Jordan (1874); the numerically stable way of computing it that is used today goes back to Gene Golub and William Kahan (1965).

Because every matrix, square or rectangular, full-rank or not, has an SVD, the method can be used to find least-squares solutions of linear systems even when no exact solution exists or the solution is not unique.

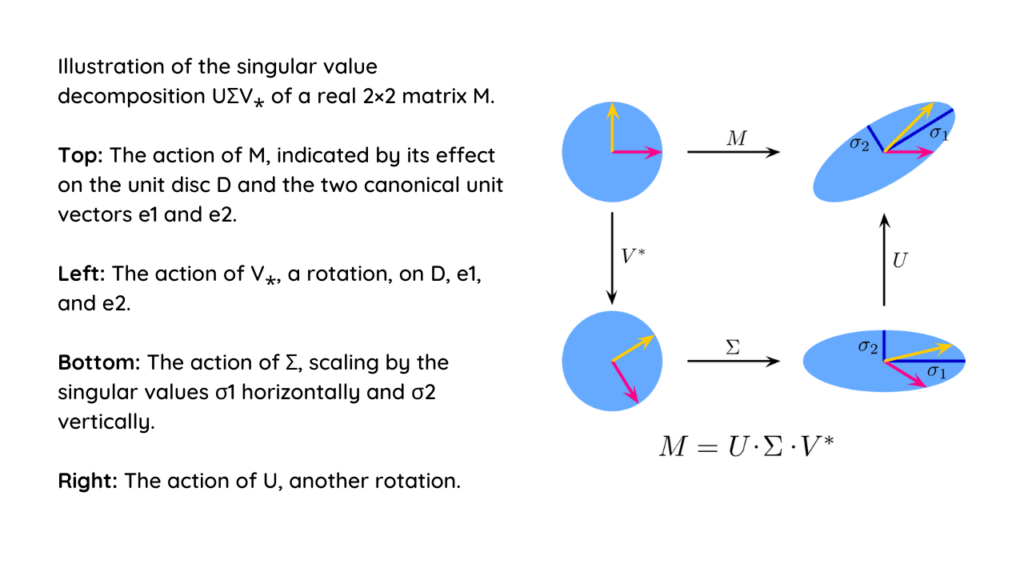

What Does SVD Look Like Visually?

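SVD decomposes a matrix into a product of three other matrices, and the decomposition has a clear geometric picture: \(V^T\) rotates (or reflects) the input, \(S\) stretches it along the coordinate axes, and \(U\) rotates (or reflects) the result. Applied to the unit circle, a \(2 \times 2\) matrix \(A\) produces an ellipse whose semi-axes have lengths equal to the singular values and point along the left singular vectors.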

Here I will explain how to decompose a matrix into three parts with formulas while showing what each step does graphically.
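The rotate, stretch, rotate picture can be checked numerically. This NumPy sketch pushes points of the unit circle through \(V^T\), then \(S\), then \(U\), and compares the longest and shortest radii of the resulting ellipse with the singular values. The \(2 \times 2\) matrix and the 2000 sample points are arbitrary choices.

```python
import numpy as np

A = np.array([[2.0, 1.0],
              [1.0, 3.0]])
U, s, Vt = np.linalg.svd(A)

# Points on the unit circle, one per column.
theta = np.linspace(0.0, 2.0 * np.pi, 2000, endpoint=False)
circle = np.vstack([np.cos(theta), np.sin(theta)])

# The three steps of the SVD, applied one after another.
rotated = Vt @ circle              # rotate/reflect: still the unit circle
stretched = np.diag(s) @ rotated   # stretch along the axes: axis-aligned ellipse
ellipse = U @ stretched            # rotate/reflect again

print(np.allclose(ellipse, A @ circle))  # True: same as applying A directly

# The longest and shortest radii of the ellipse are the singular values.
radii = np.linalg.norm(ellipse, axis=0)
print(radii.max(), s[0])   # approximately equal
print(radii.min(), s[1])   # approximately equal
```

If you want to see the picture, plotting `circle` and `ellipse` with any plotting library shows the circle turning into a tilted ellipse.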

If you don’t know the mathematics behind SVD yet, don’t worry about that for now. It is enough to know that if you want to do some basic data cleaning (that is, removing redundant information from your data), SVD can help immensely, for example by compressing thousands of variables down to a few hundred combined components that capture most of the variation in the data.

You might still need machine learning techniques later on, because SVD only captures the linear structure of the data.
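Here is a minimal NumPy sketch of this kind of compression, which is essentially principal component analysis. The data are hypothetical: 50 variables that secretly depend on only 3 hidden factors plus a little noise. After centering, projecting onto the top \(k\) right singular vectors keeps almost all of the variance.

```python
import numpy as np

rng = np.random.default_rng(1)
# Hypothetical data: 200 samples of 50 variables driven by 3 hidden factors.
factors = rng.standard_normal((200, 3))
mixing = rng.standard_normal((3, 50))
X = factors @ mixing + 0.01 * rng.standard_normal((200, 50))

# Center each variable, then take the SVD of the centered data.
Xc = X - X.mean(axis=0)
U, s, Vt = np.linalg.svd(Xc, full_matrices=False)

k = 3
# Project every sample onto the top-k right singular vectors.
X_reduced = Xc @ Vt[:k].T          # shape (200, 3)

# Fraction of total variance kept by the first k components.
kept = np.sum(s[:k] ** 2) / np.sum(s ** 2)
print(X_reduced.shape, round(kept, 4))
```

In real data the cutoff \(k\) is not known in advance; a common heuristic is to look for a sharp drop in the singular values.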

Properties of Singular Value Decomposition

SVD is a factorization of A into three matrices: U, S, and V. The following are some of the main properties that make it very useful for applications like data science.

The columns of \(U\) are orthonormal, and so are the columns of \(V\): \(U^T U = I\) and \(V^T V = I\). In other words, the left singular vectors are mutually orthogonal unit vectors, and the same holds for the right singular vectors.

The singular values \(s_1 \ge s_2 \ge \dots \ge 0\) are nonnegative, and the number of nonzero singular values equals the rank of \(A\). If a singular value is small (close to zero), \(A\) is close to a matrix of lower rank.

The squared singular values \(s_i^2\) are eigenvalues of \(A^T A\), and the nonzero ones are also the nonzero eigenvalues of \(A A^T\). The columns of \(V\) are eigenvectors of \(A^T A\), and the columns of \(U\) are eigenvectors of \(A A^T\).

The largest singular value is the spectral norm, \(\|A\|_2 = s_1\), and the Frobenius norm is \(\|A\|_F = \sqrt{\sum_i s_i^2}\). For a square invertible matrix, the ratio \(s_1 / s_n\) is the condition number, which measures how much relative errors in \(b\) can be amplified in the solution of \(A x = b\).

Singular values are also stable under perturbations: for any matrix \(E\) of the same size, each singular value of \(A + E\) differs from the corresponding singular value of \(A\) by at most \(\|E\|_2\).
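The properties of the SVD can be verified numerically on a small example. This NumPy sketch uses a hypothetical \(5 \times 4\) matrix of rank 3; the cutoff `1e-10 * s[0]` for "numerically zero" and the perturbation size `1e-3` are our own illustrative choices.

```python
import numpy as np

rng = np.random.default_rng(7)
# Hypothetical 5 x 4 matrix of rank 3 (product of 5x3 and 3x4 factors).
A = rng.standard_normal((5, 3)) @ rng.standard_normal((3, 4))
U, s, Vt = np.linalg.svd(A, full_matrices=False)

# 1. Orthonormal singular vectors: U^T U = I and V^T V = I.
orthonormal = np.allclose(U.T @ U, np.eye(4)) and np.allclose(Vt @ Vt.T, np.eye(4))

# 2. Sorted, nonnegative singular values; the count of non-negligible
#    ones is the rank (1e-10 is a generous cutoff for "numerically zero").
sorted_nonneg = np.all(s >= 0) and np.all(np.diff(s) <= 0)
numerical_rank = int(np.sum(s > 1e-10 * s[0]))

# 3. s_i^2 are the eigenvalues of A^T A (eigvalsh sorts them ascending).
eig_match = np.allclose(np.linalg.eigvalsh(A.T @ A)[::-1], s ** 2)

# 4. Norms read straight off the singular values.
norms_match = (np.isclose(np.linalg.norm(A, 2), s[0])
               and np.isclose(np.linalg.norm(A, "fro"), np.sqrt(np.sum(s ** 2))))

# 5. Stability: a perturbation E moves each singular value by at most ||E||_2.
E = 1e-3 * rng.standard_normal(A.shape)
s_pert = np.linalg.svd(A + E, compute_uv=False)
stable = np.all(np.abs(s_pert - s) <= np.linalg.norm(E, 2) + 1e-12)

print(orthonormal, sorted_nonneg, numerical_rank, eig_match, norms_match, stable)
```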

Applications of Singular Value Decomposition

Recall that the SVD factors any \(m \times n\) matrix \(A\), square or not, into three matrices \(U\), \(S\), and \(V\) such that \(A = U S V^T\), \(U^T U = I\), and \(V^T V = I\), where \(I\) is an identity matrix and \(S\) is diagonal.

Skip-gram Model: We can use the Skip-gram model to find out how words are related to each other.

If two words have vectors that are close together, they are likely to be similar in meaning as well. The Skip-gram model is one of the two architectures in word2vec and learns these word embeddings with a shallow neural network; a closely related, SVD-based alternative factors a word co-occurrence matrix and uses the leading singular vectors as the embeddings.

We can then analyze the results to see how much context (the window of neighboring words) each approach needs to produce useful similarities.
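The SVD-based route to word vectors can be sketched in a few lines of NumPy. The four words, four context words, and counts below are made up for illustration, and we factor raw counts for simplicity; practical systems usually reweight the counts first (for example with pointwise mutual information) and use far larger vocabularies.

```python
import numpy as np

words = ["cat", "dog", "car", "truck"]

# Hypothetical co-occurrence counts: how often each word (row) appears
# near the context words "pet", "feed", "drive", "road" (columns).
C = np.array([[4.0, 2.0, 0.0, 0.0],   # cat
              [3.0, 3.0, 0.0, 0.0],   # dog
              [0.0, 0.0, 4.0, 2.0],   # car
              [0.0, 0.0, 2.0, 3.0]])  # truck

U, s, Vt = np.linalg.svd(C)

k = 2
embeddings = U[:, :k] * s[:k]   # one k-dimensional vector per word

def cosine(a, b):
    """Cosine similarity between two vectors."""
    return a @ b / (np.linalg.norm(a) * np.linalg.norm(b))

vec = dict(zip(words, embeddings))
print(cosine(vec["cat"], vec["dog"]))   # close to 1: similar contexts
print(cosine(vec["cat"], vec["car"]))   # close to 0: unrelated contexts
```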

Recurrent neural networks (RNNs): RNNs are popular in the machine learning community because of their promising results on natural language processing tasks.

Based on current research, many researchers report that stacked LSTMs or gated recurrent units (GRUs) improve results over vanilla RNNs.


How to Use Singular Value Decomposition?

Singular value decomposition (SVD) is a powerful matrix factorization technique for understanding and working with data.

We’ll see that it helps us understand relationships between different parts of a dataset, compute least-squares solutions of systems of linear equations in a numerically stable way, and uncover the structure of linear transformations.
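To illustrate solving a linear system with SVD, here is a NumPy sketch that fits a straight line \(y = c_0 + c_1 t\) to five made-up points by least squares, using \(x = V S^{+} U^T b\). The helper name `lstsq_svd` and the cutoff `rtol` are hypothetical; the result is cross-checked against NumPy's own `np.linalg.lstsq`.

```python
import numpy as np

def lstsq_svd(A, b, rtol=1e-12):
    """Least-squares solution of A x ~ b via x = V S^+ U^T b (sketch)."""
    U, s, Vt = np.linalg.svd(A, full_matrices=False)
    keep = s > rtol * s[0]            # ignore numerically zero singular values
    coeffs = (U[:, keep].T @ b) / s[keep]
    return Vt[keep].T @ coeffs

# Overdetermined system: 5 equations, 2 unknowns (intercept and slope).
t = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
y = np.array([1.1, 2.9, 5.2, 7.1, 8.8])
A = np.column_stack([np.ones_like(t), t])

x = lstsq_svd(A, y)
x_ref, *_ = np.linalg.lstsq(A, y, rcond=None)
print(x, np.allclose(x, x_ref))   # about [1.1, 1.96] True
```

Working from the SVD avoids forming \(A^T A\), which squares the condition number, and it handles rank-deficient systems gracefully by dropping near-zero singular values.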

In short, singular value decomposition (SVD) can help us build more intelligent models than ones based only on simple correlations between variables.

At its core, truncating the SVD gives a low-rank approximation of the data in which the most important relationships are preserved and remain easy to interpret.

Essentially, computing the SVD lets you peek into your dataset, pull out the elements that are particularly important or salient, and discard much of the noise.
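Here is a minimal NumPy sketch of noise removal by truncation. It assumes a hypothetical clean signal of rank 2 buried in Gaussian noise, and it assumes we know the rank \(k = 2\); with real data you would choose \(k\) by inspecting the singular values.

```python
import numpy as np

rng = np.random.default_rng(3)
# Hypothetical clean signal: a 50 x 40 matrix of rank 2, plus noise.
signal = rng.standard_normal((50, 2)) @ rng.standard_normal((2, 40))
noisy = signal + 0.1 * rng.standard_normal((50, 40))

U, s, Vt = np.linalg.svd(noisy, full_matrices=False)
print(s[:4])   # two large values, then a sharp drop to the noise level

k = 2          # assumed known here
denoised = U[:, :k] @ np.diag(s[:k]) @ Vt[:k]

err_noisy = np.linalg.norm(noisy - signal)        # Frobenius norm
err_denoised = np.linalg.norm(denoised - signal)
print(err_noisy, err_denoised)   # the truncated matrix is much closer to the signal
```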

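The singular values tell us how much each component contributes to the total variation of the centered data (component \(i\) accounts for a share \(s_i^2 / \sum_j s_j^2\)), and the singular vectors tell us how much each original variable contributes to each component. Together they give an immediate sense of relative importance when making sense of our modeling results and deciding which parts may be irrelevant.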
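The importance shares and per-variable weights can be read off directly in NumPy. In this sketch the dataset is hypothetical: four random variables, where variable 3 is an almost exact copy of variable 0, so one direction carries almost no information.

```python
import numpy as np

rng = np.random.default_rng(5)
# Hypothetical dataset: 100 samples, 4 variables; variable 3 nearly copies variable 0.
X = rng.standard_normal((100, 4))
X[:, 3] = X[:, 0] + 0.05 * rng.standard_normal(100)

Xc = X - X.mean(axis=0)
U, s, Vt = np.linalg.svd(Xc, full_matrices=False)

# Share of total variation captured by each component.
share = s ** 2 / np.sum(s ** 2)
print(np.round(share, 3))   # the last share is tiny: a redundant direction

# Rows of Vt: how strongly each original variable contributes to each component.
print(np.round(Vt[0], 2))   # first component: weights on variables 0..3
```

The first component puts most of its weight on variables 0 and 3, and the near-zero last share flags that one of those two variables is redundant.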



Conclusion

SVD is widely used in machine learning, statistics, image processing, and a bunch of other areas.

It also has some important applications in data science, such as dimensionality reduction, noise reduction, and topic modeling.

DataScience Team is a group of data scientists working as IT professionals who contribute articles to analayticslearn.com. The team is a group of technical writers who write about many kinds of data science tools and technologies to help build a more skillful community of learners.